GraphQL Performance: A Practical Guide to DataLoader

GraphQL’s flexibility can also lead to performance issues, most notably the N+1 problem, where resolving nested fields triggers many redundant data fetches. DataLoader is a utility designed to address this challenge by batching and caching data requests within a single GraphQL request.

By collecting all required keys and sending a single optimized request to the data source, DataLoader significantly improves query efficiency, reduces database load, and makes your GraphQL APIs faster and more scalable.

This article explores how DataLoader works and how to implement it effectively in your GraphQL servers.

What is the N+1 Problem in GraphQL?

The N+1 problem in GraphQL occurs when a query for nested or related data triggers many redundant database or API calls. For example, suppose a query fetches a list of N items, and the resolver for a nested field then fetches related data for each item separately. The server makes 1 query for the list plus N queries for the related data, which is N+1 requests in total.

This inefficiency increases latency and resource usage, and it gets worse as the number of items grows. It happens because GraphQL runs a field's resolver independently for each parent object, so a naive resolver issues its own fetch every time instead of one batched request. Addressing the N+1 problem is crucial for scaling GraphQL APIs and keeping response times low.
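The following sketch makes the count concrete. It uses plain Node.js with no GraphQL library. The in-memory `db` object, its `getUsers` and `getPostsByUserId` methods, and the sample data are hypothetical stand-ins for a real data source. The function imitates how naive resolvers would handle a query like `{ users { posts { title } } }`.

```javascript
// Hypothetical in-memory data source that counts every query it receives.
const db = {
  queryCount: 0,
  users: [
    { id: 1, name: 'Ada' },
    { id: 2, name: 'Grace' },
    { id: 3, name: 'Linus' },
  ],
  posts: [
    { id: 10, userId: 1, title: 'Hello' },
    { id: 11, userId: 2, title: 'GraphQL' },
    { id: 12, userId: 1, title: 'Batching' },
  ],
  async getUsers() {
    this.queryCount += 1;
    return this.users;
  },
  async getPostsByUserId(userId) {
    this.queryCount += 1;
    return this.posts.filter((post) => post.userId === userId);
  },
};

// Naive resolution of `{ users { posts { title } } }`: one query for the
// list, then one more query per user for the nested `posts` field.
async function resolveUsersWithPostsNaively() {
  const users = await db.getUsers(); // 1 query
  return Promise.all(
    users.map(async (user) => ({
      ...user,
      posts: await db.getPostsByUserId(user.id), // N queries, one per user
    })),
  );
}

const done = resolveUsersWithPostsNaively().then((result) => {
  console.log(`${result.length} users, ${db.queryCount} queries`); // 3 users, 4 queries
});
```

With three users the naive approach issues four queries. With 1,000 users it would issue 1,001.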

What is GraphQL DataLoader?

GraphQL DataLoader is a generic utility designed to improve the efficiency of data fetching in GraphQL servers. It batches multiple similar requests into a single query and caches the results within the scope of a single request.

This approach reduces redundant database or API calls and solves the common N+1 problem in GraphQL. DataLoader collects the keys requested within a single tick of the event loop and dispatches one batched request for them. This improves performance, reduces latency, and keeps resolver logic simple.
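The core idea fits in a few lines. The `TinyLoader` class below is a hypothetical, deliberately simplified illustration, not DataLoader's actual implementation. It does three things:

- It queues every `load()` call made while the current synchronous code runs.
- It dispatches the queued keys as one batch via `process.nextTick`.
- It memoizes each key's promise on the instance.

```javascript
// Hypothetical, simplified loader illustrating the idea behind DataLoader.
// It is NOT the library's implementation.
class TinyLoader {
  constructor(batchFn) {
    this.batchFn = batchFn; // (keys) => Promise of values in the same order as keys
    this.cache = new Map(); // key -> Promise; lives as long as this instance
    this.queue = []; // keys (with their resolvers) waiting for the next dispatch
  }

  load(key) {
    // Caching: a repeated key gets the promise created the first time.
    if (this.cache.has(key)) return this.cache.get(key);

    const promise = new Promise((resolve, reject) => {
      this.queue.push({ key, resolve, reject });
      // Batching: the first key in an empty queue schedules one dispatch with
      // process.nextTick, so it runs after the current synchronous code and
      // later load() calls made before then join the same batch.
      if (this.queue.length === 1) process.nextTick(() => this.dispatch());
    });
    this.cache.set(key, promise);
    return promise;
  }

  async dispatch() {
    const batch = this.queue;
    this.queue = [];
    try {
      const values = await this.batchFn(batch.map((entry) => entry.key));
      batch.forEach((entry, i) => entry.resolve(values[i]));
    } catch (error) {
      batch.forEach((entry) => entry.reject(error));
    }
  }
}

// Usage: three load() calls in the same tick, one of them a repeat.
const batches = [];
const loader = new TinyLoader(async (keys) => {
  batches.push(keys);
  return keys.map((key) => `user-${key}`);
});

const done = Promise.all([loader.load(1), loader.load(2), loader.load(1)]).then((values) => {
  console.log(JSON.stringify(values)); // ["user-1","user-2","user-1"]
  console.log(JSON.stringify(batches)); // [[1,2]]
});
```

The real library builds much more on top of this, including configurable scheduling, `maxBatchSize`, per-key errors, and cache-management methods. In practice, use the `dataloader` package rather than a hand-rolled loader.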

Setting Up DataLoader in a GraphQL Server

To effectively set up DataLoader in a GraphQL server, follow these essential steps:

- Install the DataLoader package using npm or your package manager.

- Define a batch loading function that accepts an array of keys and returns a promise resolving to an array of results with the same length and order as the keys.

- Create DataLoader instances by passing the batch loading function.

- Instantiate DataLoader objects per request to maintain isolated caches and avoid data leakage.

- Integrate DataLoader instances into the GraphQL context so resolvers can access them during query execution.

- Modify resolvers to use the DataLoader’s load method to fetch data, enabling batching and caching transparently.

This setup reduces redundant and repeated database or API calls, solving the N+1 problem.

Example

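```javascript
const DataLoader = require('dataloader');

// Batch function: receives every user ID requested in the current tick and
// must return the users in the same order as `userIds`.
// `db.getUsersByIds` stands in for your own data-access layer.
const batchUsers = async (userIds) => {
  const users = await db.getUsersByIds(userIds);
  return userIds.map(id => users.find(user => user.id === id));
};

// Factory that builds a fresh set of loaders (and therefore fresh caches).
const createLoaders = () => ({
  userLoader: new DataLoader(batchUsers),
});

// Context function: the GraphQL server calls it once per incoming request.
const context = () => ({
  loaders: createLoaders(),
});

// Usage in resolvers
const resolvers = {
  User: {
    // Each load() call is queued; DataLoader sends one batch for all the IDs.
    friends: (user, args, context) => {
      return user.friendIds.map(id => context.loaders.userLoader.load(id));
    },
  },
};
```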

Implementing Batching and Caching with DataLoader

DataLoader’s core functionality revolves around batching multiple requests and caching their results to optimize data fetching in GraphQL. Batching kicks in when several resolvers call `load()` within the same tick of the event loop, as happens when one field is resolved for every item in a list. DataLoader collects all the requested keys and batches them into a single request to the data source.

The batch loading function receives this array of keys. It returns a promise that resolves to an array of results of the same length, where each result sits at the same index as its key. This ordering contract is what lets DataLoader map each response back to the right request.
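The data source may return rows in any order and may omit rows that do not exist, so a batch function usually has to realign its results. The sketch below uses a hypothetical helper, `alignToKeys`, that indexes rows by ID in a `Map` once. This avoids scanning the whole result for every key, as `users.find` does in the earlier example. Keys with no matching row come back as `null`.

```javascript
// Hypothetical helper: reorders rows so that results[i] belongs to keys[i].
// Rows are indexed once in a Map, then each key is looked up directly.
// Note: Map lookups are type-sensitive, so key 1 does not match row id '1'.
function alignToKeys(keys, rows, getKey = (row) => row.id) {
  const rowsByKey = new Map(rows.map((row) => [getKey(row), row]));
  // Same length and order as `keys`; keys with no matching row become null.
  return keys.map((key) => (rowsByKey.has(key) ? rowsByKey.get(key) : null));
}

// The rows come back in a different order, and ID 7 does not exist.
const rows = [
  { id: 3, name: 'Linus' },
  { id: 1, name: 'Ada' },
];
console.log(JSON.stringify(alignToKeys([1, 7, 3], rows)));
// [{"id":1,"name":"Ada"},null,{"id":3,"name":"Linus"}]
```

Inside a batch function, this becomes `return alignToKeys(userIds, await db.getUsersByIds(userIds));`, with `db.getUsersByIds` again standing in for your own data-access layer.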

In addition to batching, DataLoader caches the result for each key on the loader instance. If the same key is requested again, DataLoader returns the cached result instead of triggering another fetch, avoiding redundant calls.

The cache lives as long as the DataLoader instance does. Creating new instances for each request keeps caching local to that request. Nothing persists beyond it, and each new client request starts with fresh data.

Together, batching and caching drastically reduce the number of calls made to databases or external APIs, improving overall performance and preventing the common N+1 problem in GraphQL. Developers can also tune batching and caching through constructor options such as `maxBatchSize`, `batchScheduleFn`, `cache`, and `cacheKeyFn`.

By leveraging DataLoader’s batching and caching capabilities, GraphQL APIs can serve data more efficiently, reduce server load, and deliver faster response times to clients.

Practical Example: Building a GraphQL API with DataLoader

To illustrate how DataLoader improves GraphQL API performance, consider a basic example involving users and their posts. Without DataLoader, a request for users along with their posts would run one query for the users plus a separate posts query for each user, a typical N+1 problem scenario.

First, define a batch loading function that accepts an array of user IDs and fetches all related posts in one optimized query. Then, create a DataLoader instance with this batch function. In the GraphQL resolvers, instead of directly querying posts for each user, use DataLoader’s load method with the user ID. DataLoader batches these load calls and caches results within the request lifecycle.

For instance, if you query multiple users and their posts, DataLoader aggregates all user IDs and fetches corresponding posts in a single call, significantly reducing the database load. This method not only enhances performance but also simplifies resolver logic.

Here is a simplified code snippet:

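```javascript
const DataLoader = require('dataloader');

// Batch function fetching posts for multiple user IDs in one query.
// `db.getPostsByUserIds` stands in for your own data-access layer.
const batchPostsByUserIds = async (userIds) => {
  const posts = await db.getPostsByUserIds(userIds);
  // One entry per user ID, in key order; users without posts get [].
  return userIds.map(id => posts.filter(post => post.userId === id));
};

// Create the posts loader per request (not once at module level), so its
// cache is never shared between users, and expose it through the context.
const createLoaders = () => ({
  postsLoader: new DataLoader(batchPostsByUserIds),
});

const context = () => ({
  loaders: createLoaders(),
});

// GraphQL resolver for the User.posts field
const resolvers = {
  User: {
    posts: (user, args, context) => {
      return context.loaders.postsLoader.load(user.id);
    },
  },
};
```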

This example demonstrates how DataLoader effectively batches and caches requests, solving the N+1 query problem and boosting GraphQL API efficiency.
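The `filter` call in `batchPostsByUserIds` rescans every post for each user ID. That is correct but costs O(users × posts). The sketch below uses a hypothetical `groupByKeys` helper that groups the rows in a single pass while keeping the one-to-many contract: one array per key, in key order, with an empty array for users who have no posts. The sample posts are made up.

```javascript
// Hypothetical helper for one-to-many batch functions: returns one array of
// rows per key, in key order, built in a single pass over the rows.
function groupByKeys(keys, rows, getKey) {
  const groups = new Map(keys.map((key) => [key, []]));
  for (const row of rows) {
    const group = groups.get(getKey(row));
    if (group) group.push(row); // skip rows for keys that were not requested
  }
  // Keys without rows keep their empty array instead of becoming undefined.
  return keys.map((key) => groups.get(key));
}

// Made-up posts; user 3 has none.
const posts = [
  { id: 10, userId: 1, title: 'Hello' },
  { id: 11, userId: 2, title: 'GraphQL' },
  { id: 12, userId: 1, title: 'Batching' },
];
const grouped = groupByKeys([1, 2, 3], posts, (post) => post.userId);
console.log(JSON.stringify(grouped.map((list) => list.map((post) => post.id))));
// [[10,12],[11],[]]
```

In the batch function, the `userIds.map(... filter ...)` line would become `return groupByKeys(userIds, posts, (post) => post.userId);`.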

Advanced Usage and Best Practices

To ensure optimal use of DataLoader and improve GraphQL API performance, consider the following best practices:

- Create new DataLoader instances per request to isolate caches and prevent data leakage between users.

- Prime caches across related loaders with `prime()` when an entity can be loaded by more than one key (e.g., by ID and by username), so that every loader returns the same object.

- Customize batch scheduling to optimize when batch functions trigger based on application-specific needs.

- Handle errors in batch functions gracefully: return an `Error` instance at a failed key's position so that only the `load()` for that key rejects, while the other keys still resolve.

- Utilize breadth-first loading strategies for nested or federated schemas to efficiently batch data across subgraphs.

- Monitor and test DataLoader performance under load to identify bottlenecks and improve cache effectiveness.

- Keep resolver logic clean and maintainable by centralizing batching and caching using DataLoader.

- Understand the trade-offs of batching and caching, and stay updated with emerging patterns such as alternative batching algorithms to optimize GraphQL server performance.

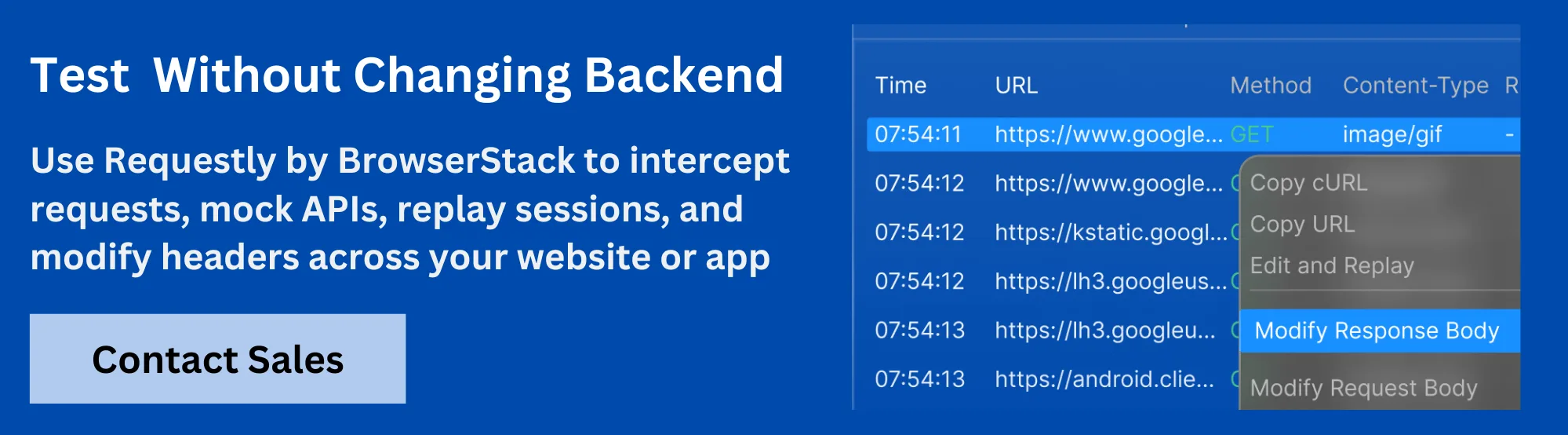

Enhancing GraphQL Development with Requestly HTTP Interceptor

Requestly HTTP Interceptor by BrowserStack is a versatile, open-source tool that lets developers intercept, modify, and debug network requests, including GraphQL API calls, in real time. It enhances productivity by allowing precise control over API requests and responses directly in the browser, simplifying complex development and testing workflows.

- Modify API Responses: Change API response data on the fly to simulate backend behaviors or test frontend features without altering the backend.

- Custom Headers & Redirects: Add or modify HTTP headers and redirect requests to different URLs or environments for seamless testing and debugging.

- Mock API Responses: Create mock GraphQL responses to test edge cases and failure scenarios without relying on a live backend.

- Inject Custom Scripts and Styles: Inject JavaScript or CSS into web pages to dynamically adjust page behavior during development or testing.

- Session Recording and Replay: Capture and replay network sessions to help teams reproduce and troubleshoot complex GraphQL issues collaboratively.

- Rule-based Interception: Apply interception rules based on URL patterns, methods, and response types for precise control over which requests are modified.

- Cross-Browser and System-wide Support: Available as browser extensions and a desktop app, enabling interception across browsers, devices, and apps to fit any development setup.

These features streamline GraphQL workflows, speeding up debugging, testing, and API iteration with greater flexibility and precision.

Conclusion

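DataLoader offers a simple and effective way to eliminate the N+1 problem in GraphQL servers. It batches the keys requested within a single tick into one call to the data source and caches results for the duration of a request. This cuts redundant database and API round trips while keeping resolvers simple.

Developers can build GraphQL APIs that deliver excellent performance and a great developer experience by following a few key practices:

- Create loaders per request.
- Return results in the same order as the keys.
- Report errors per key.
- Prime related loaders.
- Tune batching and caching options to fit their data sources.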

Additionally, leveraging powerful tools like Requestly HTTP Interceptor by BrowserStack can further enhance API development and testing. Requestly simplifies debugging, allows real-time request and response modifications, supports mocking scenarios, and improves collaboration among teams.

Integrating these best practices with advanced tools leads to robust, maintainable, and efficient APIs that meet evolving business and technical requirements.

