What is gRPC: Meaning, Benefits, and Architecture

gRPC is an open-source remote procedure call framework that enables services to communicate over a network by calling methods on remote servers as if they were local. It uses HTTP/2 and Protocol Buffers (Protobuf) to provide low-latency, efficient, and strongly typed communication suitable for modern distributed systems.

Originally developed at Google, the framework is now an open-source project hosted by the Cloud Native Computing Foundation (CNCF). Community maintenance under the CNCF keeps development active and provides implementations in many languages for microservices and real-time applications.

This article covers gRPC’s meaning, benefits, architecture, implementation, and key use cases.

Understanding gRPC

gRPC lets applications call methods on remote servers as if they were local. It abstracts network communication, so developers focus on defining services and messages. It uses Protocol Buffers (Protobuf) for compact, strongly typed, and consistent data exchange across languages.

The framework supports unary calls, client streaming, server streaming, and bidirectional streaming. HTTP/2 enables multiplexed connections, reducing latency and improving performance. gRPC also includes features for deadlines, cancellations, and error handling to make services more reliable.
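The four call types differ only in how many messages flow in each direction. Here is a minimal sketch that uses plain Python generators in place of a real gRPC runtime. The function names are hypothetical, integers stand in for Protobuf messages, and real servicer methods (in Python's grpcio, for example) also receive a context argument:

```python
from typing import Iterable, Iterator

def unary(request: int) -> int:
    """Unary: one request in, one response out."""
    return request * 2

def server_streaming(request: int) -> Iterator[int]:
    """Server streaming: one request in, a stream of responses out."""
    for i in range(request):
        yield i

def client_streaming(requests: Iterable[int]) -> int:
    """Client streaming: a stream of requests in, one response out."""
    return sum(requests)

def bidi_streaming(requests: Iterable[int]) -> Iterator[int]:
    """Bidirectional streaming: responses go out while requests are still arriving."""
    running_total = 0
    for r in requests:
        running_total += r
        yield running_total

print(unary(21))                         # 42
print(list(server_streaming(3)))         # [0, 1, 2]
print(client_streaming([1, 2, 3]))       # 6
print(list(bidi_streaming([1, 2, 3])))   # [1, 3, 6]
```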

The Benefits of Using gRPC

gRPC offers performance, reliability, and consistency for modern service communication. It reduces latency, ensures strongly typed messages, and supports real-time streaming. These advantages make it suitable for distributed systems and microservices.

Here are the main benefits of gRPC:

- High performance: gRPC uses HTTP/2 and Protobuf's compact binary encoding, which reduce payload size and network overhead. For many workloads this means faster request-response cycles and lower latency than JSON-based REST APIs, although the actual gain depends on message size and traffic patterns.

- Strong typing and contracts: Protocol Buffers enforce a defined structure for requests and responses. In statically typed languages, generated code catches many data-type mismatches at compile time rather than at runtime. Protobuf's field-numbering rules also make updates and versioning across services safer and more predictable.

- Cross-language support: gRPC can generate client and server code in multiple languages, including Java, Python, Go, and C++. Every language works from the same .proto contract, so teams can build each service in the language best suited to its requirements and still keep the services interoperable.

- Streaming support: gRPC supports client-side, server-side, and bidirectional streaming. This enables continuous data transfer for real-time applications such as live dashboards, chat apps, telemetry, or IoT devices, reducing the need for repeated requests.

- Reliable communication: Built-in support for deadlines, cancellations, and standardized error handling ensures that services respond predictably. This helps developers manage failures gracefully and maintain stability in complex distributed systems.
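To show where the size savings come from, this sketch hand-encodes a hypothetical two-field `User` message the way Protobuf's wire format does. It uses field numbers and varints instead of field names and text, then compares the result with the equivalent compact JSON. Generated code normally does this encoding for you, and a byte-count comparison alone says nothing about end-to-end latency:

```python
import json

def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, least-significant group first,
    high bit set on every byte except the last (non-negative values only)."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)

def encode_user(user_id: int, name: str) -> bytes:
    """Hand-encode the hypothetical message:
         message User { int32 id = 1; string name = 2; }
    Each field is a tag ((field_number << 3) | wire_type) followed by its value."""
    name_bytes = name.encode("utf-8")
    return (
        encode_varint((1 << 3) | 0) + encode_varint(user_id)             # field 1, varint
        + encode_varint((2 << 3) | 2) + encode_varint(len(name_bytes))   # field 2, length-delimited
        + name_bytes
    )

protobuf_bytes = encode_user(150, "testing")
json_bytes = json.dumps({"id": 150, "name": "testing"}, separators=(",", ":")).encode()

print(protobuf_bytes.hex(" "))               # 08 96 01 12 07 74 65 73 74 69 6e 67
print(len(protobuf_bytes), len(json_bytes))  # 12 27
```

The JSON form repeats the field names in every message. The Protobuf form sends only small numeric tags, and the receiver maps them back to names using the shared .proto file.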

Key Features of gRPC

gRPC includes several features that make it suitable for modern distributed systems. These features focus on performance, reliability, and developer productivity. Understanding them helps in designing robust and maintainable services.

Here are the core features of gRPC:

- Service definition using Protocol Buffers: Protobuf files define service methods and message structures clearly. This ensures consistent communication across services and reduces the chance of integration errors. It also enables code generation for multiple languages automatically.

- HTTP/2 transport: gRPC uses HTTP/2, which supports multiplexed streams and header compression. This reduces network overhead, allows multiple simultaneous calls over a single connection, and improves performance for high-volume systems.

- Synchronous and asynchronous calls: Developers can choose between blocking calls that wait for a response or non-blocking calls that continue execution. This flexibility allows services to handle different workloads efficiently.

- Built-in error handling: Standardized status codes make it easier to handle and propagate errors consistently. Services can manage timeouts, cancellations, and failures in a structured way.

- Pluggable authentication: gRPC supports secure communication using TLS, token-based authentication, and custom security implementations. This ensures data protection and reliable access control between services.

- Streaming support: gRPC supports client, server, and bidirectional streaming, enabling continuous and real-time data flows. This is ideal for live monitoring, chat applications, and telemetry systems.
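gRPC reports the outcome of every call with one of 17 numbered status codes, which the server sends in the `grpc-status` trailer. Real libraries ship their own enum (for example `grpc.StatusCode` in Python's grpcio). The standalone enum and the hypothetical `describe` helper below are only an illustration:

```python
from enum import IntEnum

class StatusCode(IntEnum):
    """The canonical gRPC status codes and their numeric values."""
    OK = 0
    CANCELLED = 1
    UNKNOWN = 2
    INVALID_ARGUMENT = 3
    DEADLINE_EXCEEDED = 4
    NOT_FOUND = 5
    ALREADY_EXISTS = 6
    PERMISSION_DENIED = 7
    RESOURCE_EXHAUSTED = 8
    FAILED_PRECONDITION = 9
    ABORTED = 10
    OUT_OF_RANGE = 11
    UNIMPLEMENTED = 12
    INTERNAL = 13
    UNAVAILABLE = 14
    DATA_LOSS = 15
    UNAUTHENTICATED = 16

def describe(grpc_status: str) -> str:
    """Turn the decimal value of a grpc-status trailer into a readable name."""
    return StatusCode(int(grpc_status)).name

print(describe("4"))    # DEADLINE_EXCEEDED
print(describe("14"))   # UNAVAILABLE
```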

Key Use Cases for gRPC

gRPC is well-suited for scenarios that require high performance, reliable communication, and structured data exchange. It is widely adopted in microservices, real-time applications, and multi-language environments.

Below are common use cases for gRPC:

- Microservices communication: gRPC enables fast and efficient communication between internal services. Strongly typed contracts and low-latency messaging reduce integration errors and improve overall system reliability.

- Real-time data streaming: gRPC’s streaming support allows continuous data transfer from server to client, client to server, or bidirectionally. This is useful for live dashboards, telemetry systems, and chat applications.

- Cross-platform APIs: Clients in any supported language can consume a gRPC service from the same contract, which keeps behavior consistent across mobile, desktop, and backend applications. Browsers cannot call native gRPC directly, so web applications typically use gRPC-Web through a proxy such as Envoy.

- Authentication and authorization between services: gRPC supports secure communication with TLS and token-based authentication, enabling reliable identity management across distributed services.

- Low-latency, high-volume systems: Applications that require fast response times and handle a large number of requests, such as online gaming or financial trading platforms, benefit from gRPC’s efficient protocol.

gRPC Architecture Explained

gRPC follows a client-server architecture, where the client makes requests and the server processes them. Both rely on a shared contract defined using Protocol Buffers (Protobuf), which specifies the methods and message formats.

HTTP/2 acts as the transport layer, handling connections, multiplexed streams, and efficient data transfer. It allows multiple requests and responses to flow simultaneously over a single connection, reducing latency and improving performance.
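The following toy model of multiplexing assumes each call's messages have already been cut into chunks. Frames from three concurrent calls share one connection, and the receiver reassembles each call by stream ID. Real HTTP/2 also uses HEADERS frames, flow control, and HPACK header compression, and none of these are modeled here:

```python
from __future__ import annotations

from collections import defaultdict
from itertools import zip_longest

def interleave(calls: dict[int, list[bytes]]) -> list[tuple[int, bytes]]:
    """Sender side: send one frame from each active stream in turn over one connection."""
    per_stream = [[(stream_id, chunk) for chunk in chunks] for stream_id, chunks in calls.items()]
    wire: list[tuple[int, bytes]] = []
    for row in zip_longest(*per_stream):          # pads finished streams with None
        wire.extend(frame for frame in row if frame is not None)
    return wire

def demultiplex(wire: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Receiver side: reassemble each call by grouping frames on their stream ID."""
    streams: dict[int, bytes] = defaultdict(bytes)
    for stream_id, chunk in wire:
        streams[stream_id] += chunk
    return dict(streams)

# Three concurrent calls; client-initiated HTTP/2 streams use odd IDs.
calls = {1: [b"GetUser:", b"42"], 3: [b"ListOrders:", b"page=", b"1"], 5: [b"Ping"]}
wire = interleave(calls)
print([stream_id for stream_id, _ in wire])   # [1, 3, 5, 1, 3, 3]
print(demultiplex(wire))
```

No call waits for another to finish. Each call's frames travel alongside the others, and the stream ID alone is enough to put them back together.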

Here are the main components explained:

- Client: The client calls remote methods as if they were local functions. It automatically serializes requests into Protobuf messages and deserializes responses. This simplifies communication and ensures data matches the message types defined in the contract.

- Server: The server implements the service methods defined in the Protobuf contract. It handles requests, processes data, and sends back responses. Servers can manage multiple clients efficiently using HTTP/2 features like multiplexing.

- Protobuf definitions: These files define the services, request types, and response types. They act as a strict contract, and every message is strongly typed. As long as existing field numbers are never reused or changed, the schema can evolve in a backward-compatible way, which makes versioning easier.

- HTTP/2 transport layer: This layer manages connections between clients and servers. It supports multiple streams over one connection, reduces overhead, compresses headers, and enables bidirectional communication for streaming scenarios.

- Optional middleware: Middleware (called interceptors in gRPC) can be added on the client or server for logging, authentication, monitoring, or error handling. It improves security, observability, and maintainability without changing the core application logic.
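Interceptors compose like nested function calls. The sketch below shows the pattern with plain functions and a hypothetical `(method, metadata, request)` handler shape, not any library's API. Real frameworks define their own interfaces, such as `grpc.ServerInterceptor` in Python's grpcio:

```python
from typing import Callable, Dict, List, Set

# Hypothetical handler shape: (method path, metadata, request) -> response.
Handler = Callable[[str, Dict[str, str], dict], dict]

def logging_interceptor(next_handler: Handler, log: List[str]) -> Handler:
    """Records every call before and after it reaches the next handler."""
    def handler(method, metadata, request):
        log.append(f"-> {method}")
        response = next_handler(method, metadata, request)
        log.append(f"<- {method} {response['status']}")
        return response
    return handler

def auth_interceptor(next_handler: Handler, valid_tokens: Set[str]) -> Handler:
    """Rejects calls whose 'authorization' metadata is not a known token."""
    def handler(method, metadata, request):
        if metadata.get("authorization") not in valid_tokens:
            return {"status": "UNAUTHENTICATED"}   # short-circuit: business logic never runs
        return next_handler(method, metadata, request)
    return handler

def say_hello(method, metadata, request):
    """Core business logic: knows nothing about logging or authentication."""
    return {"status": "OK", "message": f"Hello, {request['name']}"}

# Outermost first: logging wraps auth, which wraps the business logic.
log: List[str] = []
chain = logging_interceptor(auth_interceptor(say_hello, {"Bearer secret"}), log)

print(chain("/demo.Greeter/SayHello", {"authorization": "Bearer secret"}, {"name": "Ada"}))
print(chain("/demo.Greeter/SayHello", {}, {"name": "Ada"}))
print(log)
```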

Setting Up and Implementing gRPC in Your Project

Implementing gRPC involves defining services, generating code, building client and server applications, and testing communication. Each step ensures services remain consistent, efficient, and maintainable.

1. Install the required tools

Start by setting up the necessary libraries and compilers for your environment. You need the language-specific gRPC library for both server and client, for example grpcio for Python, grpc-java for Java, and grpc-go for Go. You also need the Protocol Buffers compiler (protoc), plus any language-specific plugin, to generate client and server code from .proto files. In Python, the grpcio-tools package bundles protoc. Go uses the protoc-gen-go and protoc-gen-go-grpc plugins.
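For a Python project, a quick sanity check can confirm which of these pieces are available. This sketch only looks for the importable `grpc` and `grpc_tools` packages (installed by `pip install grpcio grpcio-tools`) and a `protoc` binary on `PATH`. The function name is hypothetical:

```python
import importlib.util
import shutil

def check_grpc_tooling() -> dict:
    """Report which gRPC tooling pieces are visible from this Python environment."""
    return {
        # Runtime library (pip package: grpcio).
        "grpc": importlib.util.find_spec("grpc") is not None,
        # Code generator (pip package: grpcio-tools), run as `python -m grpc_tools.protoc`.
        "grpc_tools": importlib.util.find_spec("grpc_tools") is not None,
        # A standalone protoc binary; optional when grpc_tools is installed.
        "protoc_on_path": shutil.which("protoc") is not None,
    }

for tool, found in check_grpc_tooling().items():
    print(f"{tool:15} {'found' if found else 'missing'}")
```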

2. Define service contracts using Protobuf

Create .proto files that define the service methods, request messages, and response messages, including field types, field numbers, and method names. Each method is unary by default. Adding the stream keyword to the request, the response, or both makes it client streaming, server streaming, or bidirectional. These files act as a strict contract between the client and server, enforcing strong typing and supporting backward-compatible changes.
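Backward compatibility comes from the field numbers, not the field names. This sketch assumes a hypothetical `User` message that gained an `email` field in version 2. It shows a hand-written version-1 decoder reading version-2 bytes: the decoder skips the field number it does not recognize instead of failing. This only holds if existing field numbers are never reused or renumbered:

```python
from __future__ import annotations

# Version 1 of a hypothetical contract:
#   message User { int32 id = 1; string name = 2; }
# Version 2 adds a field without touching the existing numbers:
#   message User { int32 id = 1; string name = 2; string email = 3; }

def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7

def decode_user_v1(buf: bytes) -> dict:
    """A reader built from version 1: knows fields 1 and 2 and skips the rest."""
    user = {"id": 0, "name": ""}          # proto3 defaults when a field is absent
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x07
        if wire_type == 0:                 # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 1:               # fixed 64-bit
            value, pos = buf[pos:pos + 8], pos + 8
        elif wire_type == 2:               # length-delimited (strings, bytes, nested messages)
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire_type == 5:               # fixed 32-bit
            value, pos = buf[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        if field_number == 1:
            user["id"] = value
        elif field_number == 2:
            user["name"] = value.decode("utf-8")
        # Any other field number is unknown to version 1 and is skipped.
    return user

# Bytes from a version-2 sender: id=150, name="testing", email="a@b.c" (field 3).
v2_bytes = bytes.fromhex("089601120774657374696e67") + bytes([(3 << 3) | 2, 5]) + b"a@b.c"
print(decode_user_v1(v2_bytes))   # {'id': 150, 'name': 'testing'}
```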

3. Generate client and server code

Use the protoc compiler to generate client and server stubs in your chosen programming language. These stubs handle serialization and deserialization automatically, reducing the chance of errors. The generated code provides ready-to-use classes or functions for making RPC calls and handling responses.
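Generated stubs hide two wire-level details. First, each method is addressed by an HTTP/2 path of the form `/package.Service/Method`. Second, each serialized message is sent with a 5-byte prefix: a 1-byte compression flag and a 4-byte big-endian length. Python's generated stubs, for instance, register methods with calls like `channel.unary_unary('/helloworld.Greeter/SayHello', ...)`. Here is a minimal sketch of the path and framing, using a hypothetical hand-encoded request payload:

```python
from __future__ import annotations

import struct

def method_path(package: str, service: str, method: str) -> str:
    """gRPC over HTTP/2 addresses a method as '/<package>.<Service>/<Method>'."""
    return f"/{package}.{service}/{method}"

def frame(message: bytes, compressed: bool = False) -> bytes:
    """Length-prefixed message: 1-byte compressed flag, 4-byte big-endian length, payload."""
    return struct.pack(">BI", int(compressed), len(message)) + message

def unframe(data: bytes) -> list[bytes]:
    """Split a body of length-prefixed messages back into payloads (uncompressed only)."""
    messages, pos = [], 0
    while pos < len(data):
        compressed, length = struct.unpack_from(">BI", data, pos)
        if compressed:
            raise ValueError("compressed messages are not handled in this sketch")
        pos += 5
        messages.append(data[pos:pos + length])
        pos += length
    return messages

path = method_path("helloworld", "Greeter", "SayHello")
body = frame(b"\x0a\x03Ada")   # hypothetical request with field 1 = "Ada"
print(path)                    # /helloworld.Greeter/SayHello
print(body.hex(" "))           # 00 00 00 00 05 0a 03 41 64 61
```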

4. Implement server logic

Build the server by implementing the methods defined in the Protobuf contract. Process incoming requests, apply business logic, and send back responses. Use built-in gRPC features like deadlines, cancellations, and streaming to enhance performance and reliability.
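Deadlines mean the server should stop work once the caller has stopped waiting. The following minimal asyncio sketch assumes a hypothetical `slow_lookup` handler and uses string status names. In a real grpcio servicer, the handler can check `context.time_remaining()`, and the framework itself reports `DEADLINE_EXCEEDED` to the client:

```python
from __future__ import annotations

import asyncio

async def slow_lookup(user_id: int) -> str:
    """Hypothetical business logic that takes about 0.2 s."""
    await asyncio.sleep(0.2)
    return f"user-{user_id}"

async def handle_with_deadline(user_id: int, timeout_s: float) -> tuple[str, str]:
    """Run the handler under the caller's deadline and map the outcome to a status.
    asyncio.wait_for cancels the inner coroutine when time runs out, so the
    server stops doing work nobody is waiting for."""
    try:
        result = await asyncio.wait_for(slow_lookup(user_id), timeout=timeout_s)
        return "OK", result
    except asyncio.TimeoutError:
        return "DEADLINE_EXCEEDED", ""

print(asyncio.run(handle_with_deadline(7, timeout_s=1.0)))    # ('OK', 'user-7')
print(asyncio.run(handle_with_deadline(7, timeout_s=0.05)))   # ('DEADLINE_EXCEEDED', '')
```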

5. Build the client application

Use the generated client code to call server methods. The client automatically serializes requests and deserializes responses. You can also handle errors, set timeouts, and use streaming features as needed.
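Client libraries usually take a timeout as a call argument (for example, `timeout=` on grpcio stub calls) and send it to the server as a `grpc-timeout` request header. The header value is an integer of at most 8 digits followed by a unit (`H`, `M`, `S`, `m`, `u`, or `n`). The sketch below implements that encoding. Picking the finest unit that fits and rounding up are choices made here, not requirements:

```python
# grpc-timeout units from finest to coarsest, with their size in nanoseconds.
_UNITS = [("n", 1), ("u", 1_000), ("m", 1_000_000), ("S", 1_000_000_000),
          ("M", 60 * 1_000_000_000), ("H", 3600 * 1_000_000_000)]
_MAX_VALUE = 99_999_999   # the value may have at most 8 ASCII digits

def encode_grpc_timeout(seconds: float) -> str:
    """Use the finest unit whose value fits in 8 digits, rounding up so the
    encoded deadline is never shorter than requested."""
    nanos = round(seconds * 1_000_000_000)
    if nanos <= 0:
        raise ValueError("timeout must be positive")
    for unit, unit_nanos in _UNITS:
        value = -(-nanos // unit_nanos)   # ceiling division
        if value <= _MAX_VALUE:
            return f"{value}{unit}"
    raise ValueError("timeout too large to encode")

def decode_grpc_timeout(header: str) -> int:
    """Return the timeout carried by a grpc-timeout header, in nanoseconds."""
    value, unit = int(header[:-1]), header[-1]
    return value * dict(_UNITS)[unit]

print(encode_grpc_timeout(0.05))     # 50000000n
print(encode_grpc_timeout(1.5))      # 1500000u
print(decode_grpc_timeout("100m"))   # 100000000
```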

6. Test and monitor

Verify that all endpoints function correctly under different scenarios, including normal operation, high load, and edge cases. Test error handling to ensure the service responds correctly to invalid requests, timeouts, or cancelled calls. Validate streaming behavior for client, server, and bidirectional streams to ensure data is sent and received reliably.
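Error handling is easiest to test by swapping the generated stub for an in-memory fake. In grpcio, a failed call raises `grpc.RpcError`, whose `code()` method returns the status. The `FakeRpcError`, `FakeUserStub`, and `fetch_user_name` names below are hypothetical stand-ins that keep the sketch free of dependencies:

```python
from __future__ import annotations

from typing import Optional

class FakeRpcError(Exception):
    """Hypothetical stand-in for the error a real client library raises on a failed call."""
    def __init__(self, code: str):
        super().__init__(code)
        self.code = code

class FakeUserStub:
    """Hypothetical in-memory replacement for a generated stub, used only in tests."""
    def __init__(self, users: dict[int, str], fail_with: Optional[str] = None):
        self.users = users
        self.fail_with = fail_with

    def GetUser(self, user_id: int, timeout: float) -> str:
        if self.fail_with:                      # simulate a transport or server failure
            raise FakeRpcError(self.fail_with)
        if user_id not in self.users:
            raise FakeRpcError("NOT_FOUND")
        return self.users[user_id]

def fetch_user_name(stub, user_id: int) -> Optional[str]:
    """Client code under test: NOT_FOUND becomes None, every other error propagates."""
    try:
        return stub.GetUser(user_id, timeout=2.0)
    except FakeRpcError as err:
        if err.code == "NOT_FOUND":
            return None
        raise

# Normal operation, a missing record, and a timeout that must not be swallowed.
stub = FakeUserStub({1: "Ada"})
assert fetch_user_name(stub, 1) == "Ada"
assert fetch_user_name(stub, 2) is None
try:
    fetch_user_name(FakeUserStub({}, fail_with="DEADLINE_EXCEEDED"), 1)
except FakeRpcError as err:
    assert err.code == "DEADLINE_EXCEEDED"
else:
    raise AssertionError("DEADLINE_EXCEEDED should propagate")
print("client error-handling checks passed")
```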

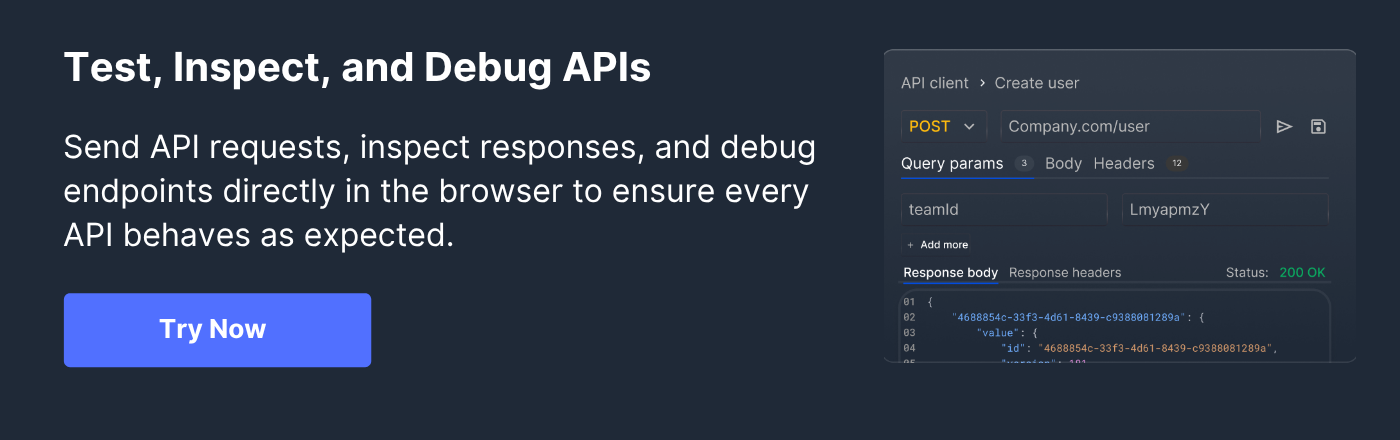

Use Requestly to simulate requests, inspect responses, and validate workflow logic without affecting production systems. This allows teams to catch integration issues early, verify error handling, and ensure consistent behavior across different clients and services.

Comparing gRPC with RESTful APIs

gRPC and REST APIs both enable communication between services, but they differ in protocol, performance, and data handling. Understanding these differences helps in choosing the right approach for your application needs.

gRPC uses HTTP/2 and a binary serialization format, which reduces payload size, supports multiplexed streams, and lowers latency. REST APIs most commonly exchange JSON, often over HTTP/1.1. JSON is text-based and larger, so it is less efficient for high-volume or real-time communication.

Here are the key differences:

- Protocol and data format: gRPC uses HTTP/2 and Protobuf for compact, strongly typed messages. REST commonly uses JSON over HTTP/1.1, which is human-readable but larger and less efficient.

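- Performance: gRPC offers lower latency and higher throughput due to binary serialization and multiplexed connections. REST over HTTP/1.1 can be slower in high-volume systems because, in practice, each connection handles one request at a time. Clients must therefore queue requests or open additional connections.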

- Communication patterns: gRPC supports unary and streaming calls, including bidirectional streaming. REST is limited to request-response patterns unless paired with technologies such as WebSockets or Server-Sent Events.

- Error handling: gRPC provides standardized status codes and built-in error handling. REST relies on HTTP status codes and custom error formats.

- Versioning and contracts: Protobuf in gRPC enforces strict contracts and supports backward-compatible evolution as long as field numbers are not reused. REST APIs often require careful management of endpoint changes to avoid breaking clients.
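The two error models meet when a gRPC client receives a plain HTTP error with no `grpc-status` trailer, for example from a proxy. Normal gRPC responses use HTTP 200 and carry the real status in trailers, so the gRPC project documents a fallback mapping for this case. Here is a sketch of that table as a lookup:

```python
# Fallback mapping from HTTP status to gRPC status, applied only when a
# response carries no grpc-status (e.g. an error page from a proxy).
HTTP_TO_GRPC = {
    400: "INTERNAL",
    401: "UNAUTHENTICATED",
    403: "PERMISSION_DENIED",
    404: "UNIMPLEMENTED",
    429: "UNAVAILABLE",
    502: "UNAVAILABLE",
    503: "UNAVAILABLE",
    504: "UNAVAILABLE",
}

def grpc_status_from_http(http_status: int) -> str:
    """Every HTTP status not listed in the table maps to UNKNOWN."""
    return HTTP_TO_GRPC.get(http_status, "UNKNOWN")

print(grpc_status_from_http(503))   # UNAVAILABLE
print(grpc_status_from_http(500))   # UNKNOWN
```

The mapping is deliberately lossy. gRPC's own status codes are richer than what a generic HTTP error page can express, which is one reason gRPC carries its status separately from the HTTP status line.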

How Requestly Can Help Test gRPC APIs

Testing gRPC APIs requires handling binary protocols, inspecting messages, and simulating different scenarios without affecting production. Requestly provides a simple way to achieve this while giving visibility into requests and responses.

Requestly allows teams to intercept gRPC calls and inspect the request and response payloads in real time. This helps verify that messages follow the Protobuf contract and that services behave as expected under different conditions.

Here are a few ways Requestly helps test gRPC APIs:

- Intercept and Inspect gRPC Requests: Capture gRPC requests and examine request and response payloads in real time. Verify that messages follow the Protobuf contract and ensure services behave as expected.

- Simulate Different Scenarios: Modify requests to test edge cases, error handling, or alternative workflows. Mock server responses to test client behavior without a live backend.

- Monitor Performance and Reliability: Track latency, throughput, and resource usage to identify bottlenecks and ensure gRPC services meet performance requirements.

- Validate Streaming Behavior: Test client, server, and bidirectional streams to ensure data flows correctly and reliably in real-time applications.

Conclusion

gRPC is a high-performance, open-source framework that enables efficient communication between distributed services. It uses HTTP/2 and Protocol Buffers to provide low-latency, strongly typed, and reliable message exchange.

Requestly helps test gRPC APIs by giving visibility into requests and responses, simulating scenarios, and validating streaming and error-handling behavior. This helps teams confirm that services function correctly, perform reliably, and respect the Protobuf contract across different clients and environments.

