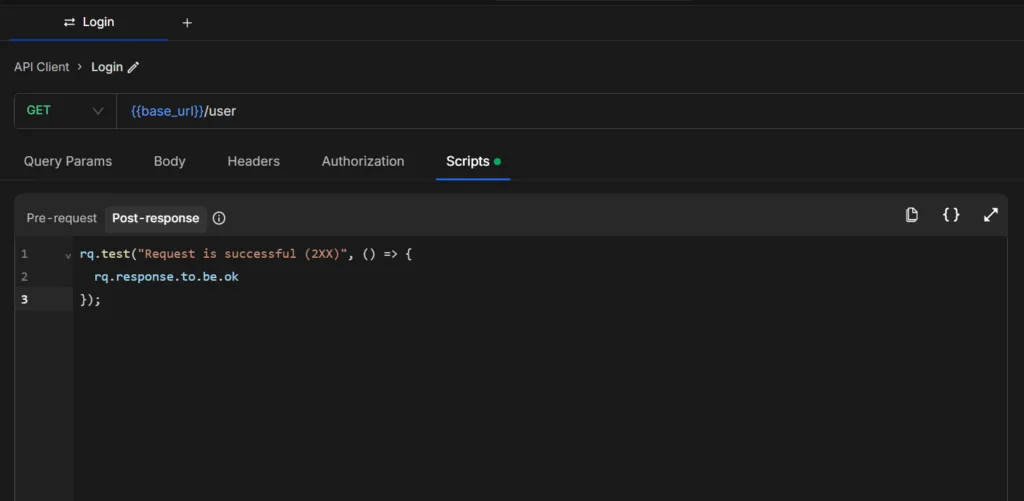

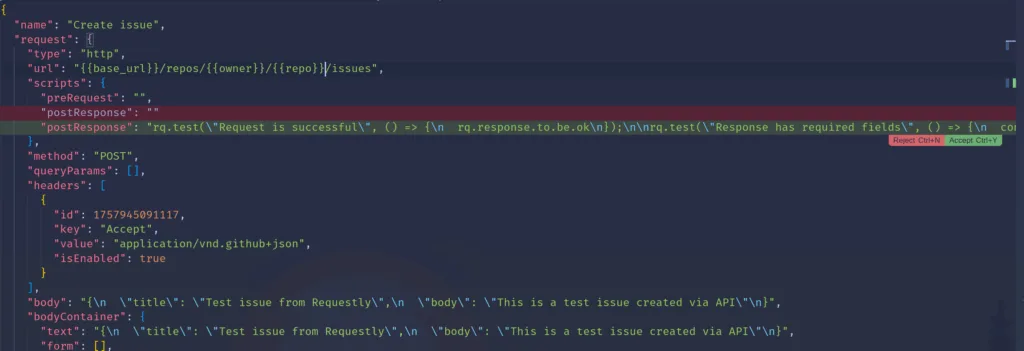

Cursor will then add tests to every request in the collection.

The drawback of this approach is that you can't review the tests before Cursor writes them to the file, so run them and confirm they pass before committing.

Best Practices for AI-Generated API Testing

AI-assisted testing works best when you set it up for success. Cursor and Requestly make the workflow faster and safer, but the quality of your tests still depends on how you guide and maintain the process. Here are some best practices to keep your testing reliable over time:

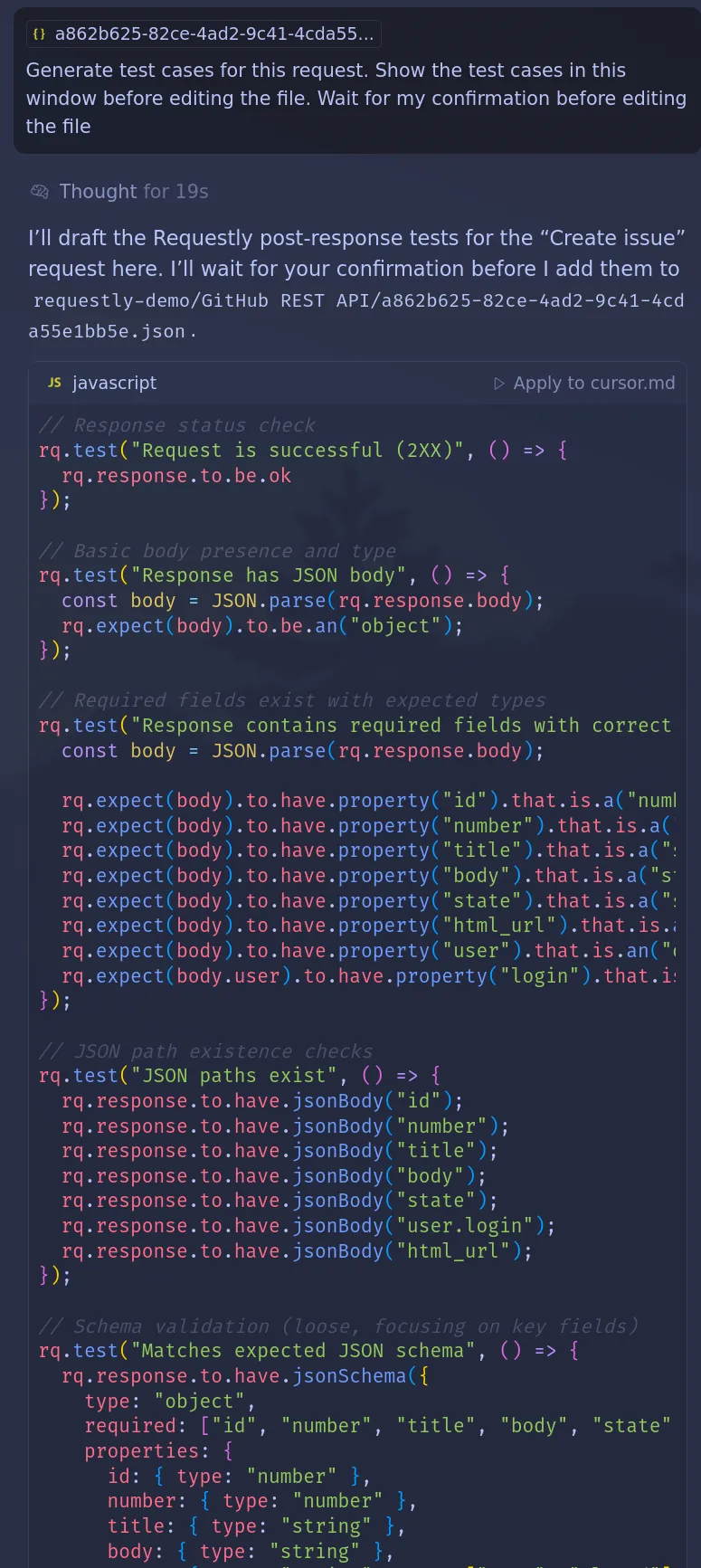

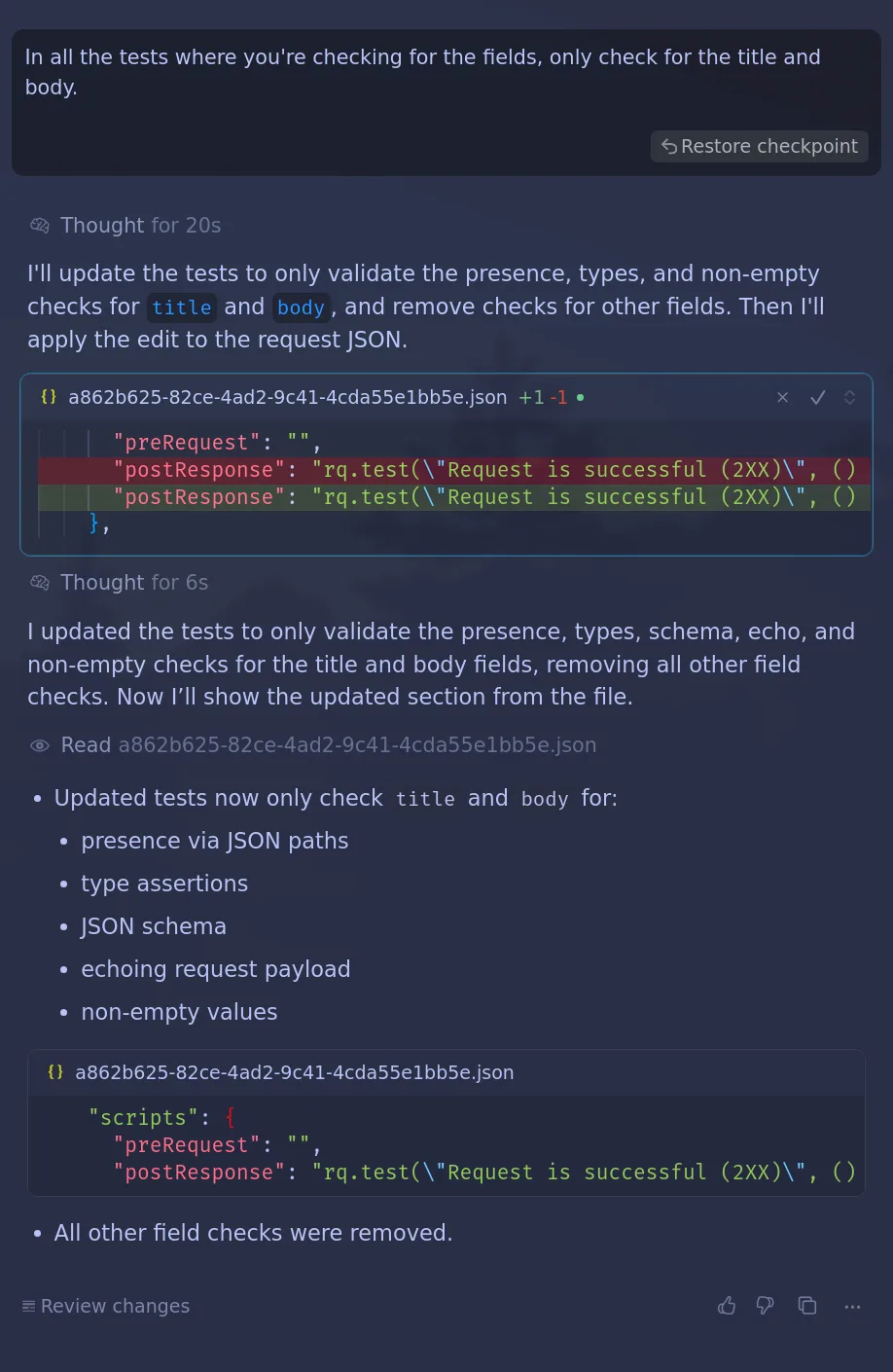

Write Clear Prompts for Cursor

Cursor will only be as precise as the instructions you provide. Don’t just say “write a test”. Instead, specify the fields you care about, the types of responses you expect, and whether you want edge or negative cases included. For example:

“Generate tests for this request. Check for a 200 status, verify title and body fields exist, and add one negative test for unauthorized access.”
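To make that prompt concrete, here is a rough TypeScript sketch of the checks it asks for. It is not Requestly's test script API. The `ApiResponse` shape and the function names are hypothetical, and the negative check assumes the API rejects missing credentials with a 401 (some APIs use 403 instead).

```typescript
// Hypothetical response shape; Requestly's real script API differs.
interface ApiResponse {
  status: number;
  body: unknown;
}

interface CheckResult {
  name: string;
  passed: boolean;
}

// Treat non-object bodies as empty so field checks fail instead of throwing.
function asRecord(value: unknown): Record<string, unknown> {
  return typeof value === "object" && value !== null
    ? (value as Record<string, unknown>)
    : {};
}

// Positive case from the prompt: 200 status plus `title` and `body` fields.
function checkPostResponse(res: ApiResponse): CheckResult[] {
  const data = asRecord(res.body);
  return [
    { name: "status is 200", passed: res.status === 200 },
    { name: "has title field", passed: "title" in data },
    { name: "has body field", passed: "body" in data },
  ];
}

// Negative case: assumes the API rejects missing credentials with 401.
function checkUnauthorized(res: ApiResponse): CheckResult {
  return { name: "rejects unauthorized access", passed: res.status === 401 };
}

// Example: a well-formed response passes all three positive checks.
const results = checkPostResponse({ status: 200, body: { title: "Hello", body: "World" } });
console.log(results.every((r) => r.passed)); // true
```

Each named check maps to one clause of the prompt, which is what makes the generated output easy to review: if a clause has no matching check, the prompt was ignored.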

The more context you give, the less cleanup you'll need later. You can also move instructions you repeat often into Cursor's project rules so they're applied automatically.

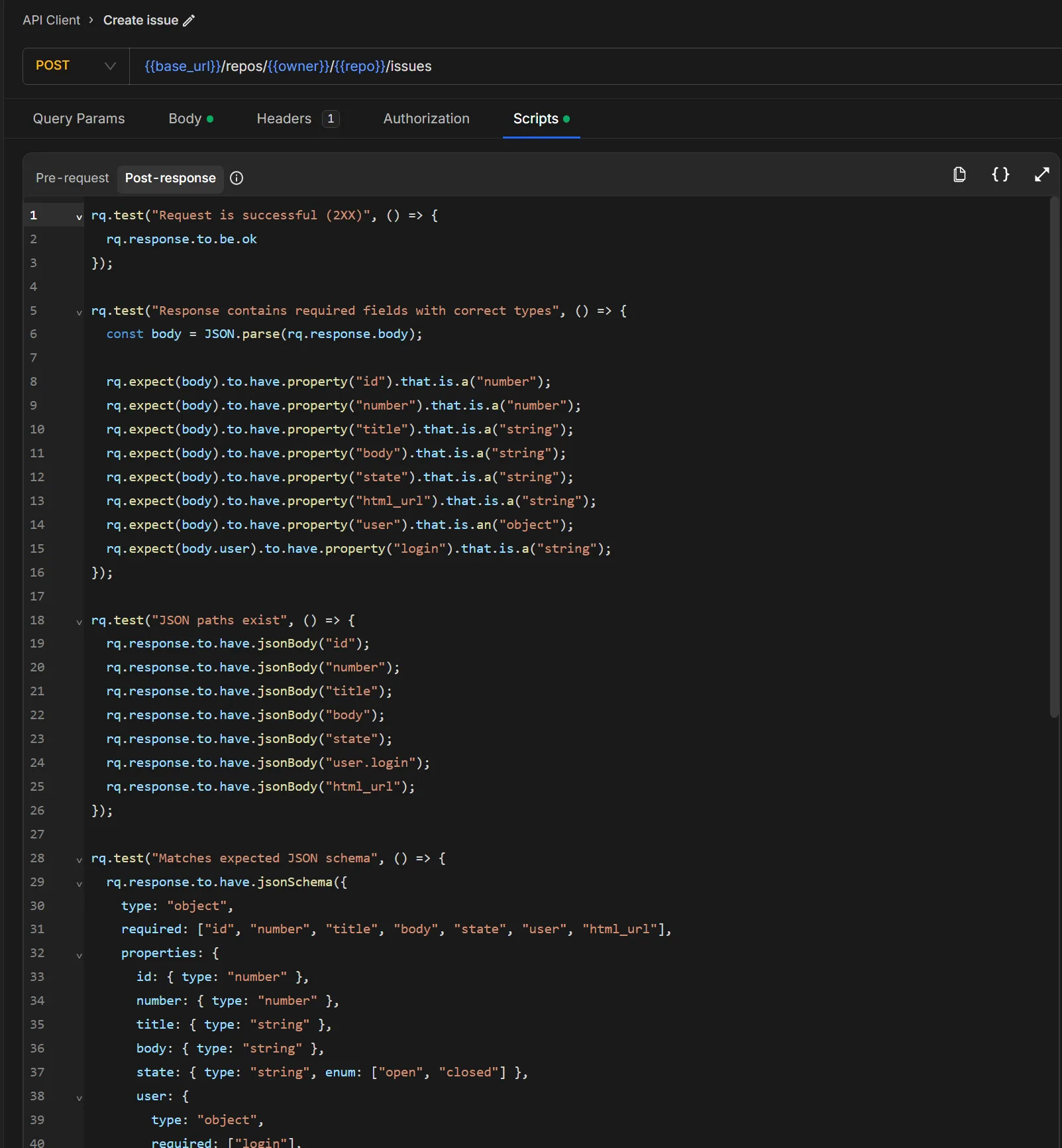

Always Review and Validate AI Output

Even with good prompts, AI may generate redundant, flaky, or irrelevant tests. Treat Cursor's output as a draft: review it, remove what you don't need, and refine the rest. Requestly makes this easy because tests are stored as JSON with embedded scripts, so a diff shows exactly what changed before you commit.
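Duplicates are one of the easier problems to catch mechanically. The TypeScript sketch below flags tests whose scripts are identical apart from whitespace. The `GeneratedTest` shape is a hypothetical simplification, not Requestly's actual JSON schema, so you would map its fields onto whatever your exported collection contains.

```typescript
// Hypothetical shape of one generated test; map these fields onto
// whatever your exported Requestly collection JSON actually contains.
interface GeneratedTest {
  name: string;
  script: string;
}

// Collapse whitespace so formatting differences don't hide duplicates.
function normalizeScript(script: string): string {
  return script.replace(/\s+/g, " ").trim();
}

// Return the names of tests whose script repeats an earlier test's script.
function findDuplicateTests(tests: GeneratedTest[]): string[] {
  const seen = new Set<string>();
  const duplicates: string[] = [];
  for (const test of tests) {
    const key = normalizeScript(test.script);
    if (seen.has(key)) {
      duplicates.push(test.name);
    } else {
      seen.add(key);
    }
  }
  return duplicates;
}
```

Run it per request rather than across the whole collection, since the same check on different requests is usually intentional. Treat the result as a review list, not an automatic delete.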

Use Requestly Mocks and Intercepts for Safer Testing

Not every test should hit the real API. For failure cases such as 500 errors or rate limits (HTTP 429), use Requestly's mocks and intercepts to simulate those responses instead of trying to trigger them on a live server.
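The idea is that the test controls the failure instead of waiting for it. In the TypeScript sketch below, the canned `mocks` objects stand in for what a Requestly mock would serve over the network, and `retryDelaySeconds` is hypothetical client logic under test. The scenario names, the `retry-after` value, and both fallback delays are illustrative assumptions.

```typescript
// Canned responses standing in for what a Requestly mock or intercept
// would return; scenario names and header values are illustrative.
interface MockResponse {
  status: number;
  headers: Record<string, string>;
}

const mocks: Record<"serverError" | "rateLimited", MockResponse> = {
  serverError: { status: 500, headers: {} },
  rateLimited: { status: 429, headers: { "retry-after": "30" } },
};

// Hypothetical client logic under test: decide whether and when to retry.
function retryDelaySeconds(res: MockResponse): number | null {
  if (res.status === 429) {
    // Honor Retry-After when it is numeric; the 60s fallback is an assumption.
    const seconds = Number(res.headers["retry-after"]);
    return isFinite(seconds) ? seconds : 60;
  }
  if (res.status >= 500) {
    return 5; // fixed backoff for server errors, also an assumption
  }
  return null; // no retry for successes or other client errors
}

console.log(retryDelaySeconds(mocks.rateLimited)); // 30
console.log(retryDelaySeconds(mocks.serverError)); // 5
```

Because the failure is deterministic, the same test passes or fails for the same reason every time, which is exactly what a flaky live 500 cannot guarantee.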

Keep Secrets Local

Never paste API tokens, passwords, or other secrets into Cursor prompts. Store them in Requestly environments and variables instead, so you can run AI-generated tests without exposing credentials. Keep development credentials in a development environment, and add the Requestly environments folder to .gitignore so they are never committed by accident.
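Conceptually, the committed request holds only a placeholder and the real value is looked up locally at run time. The TypeScript sketch below illustrates that substitution. The double-brace `{{authToken}}` style is assumed here, and `resolveVariables` is a hypothetical helper, not Requestly's implementation.

```typescript
// Illustrative only: the committed request contains a placeholder such as
// {{authToken}}, and the value comes from a local, git-ignored environment.
type Environment = Record<string, string>;

function resolveVariables(template: string, env: Environment): string {
  return template.replace(/\{\{\s*([\w.-]+)\s*\}\}/g, (match: string, name: string) => {
    // Only use the environment's own keys, never inherited object properties.
    const value = Object.prototype.hasOwnProperty.call(env, name) ? env[name] : undefined;
    // Leave unknown placeholders untouched so missing variables are easy to spot.
    return value ?? match;
  });
}

const devEnv: Environment = { authToken: "dev-only-token" };
console.log(resolveVariables("Bearer {{authToken}}", devEnv)); // Bearer dev-only-token
```

Leaving unresolved placeholders intact, rather than substituting an empty string, makes a missing variable show up as an obvious failure instead of a confusing 401.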

Version Control Your Tests

Because Requestly stores collections and tests as JSON files, you can commit them directly to Git. Your team can then review, track history, and collaborate on test changes just as they do with application code. Each AI-generated test case can be peer-reviewed before merging, which keeps quality and accountability in check as your test suite grows.

With this setup, teams can save substantial time on test writing, especially for APIs with many endpoints that change frequently.

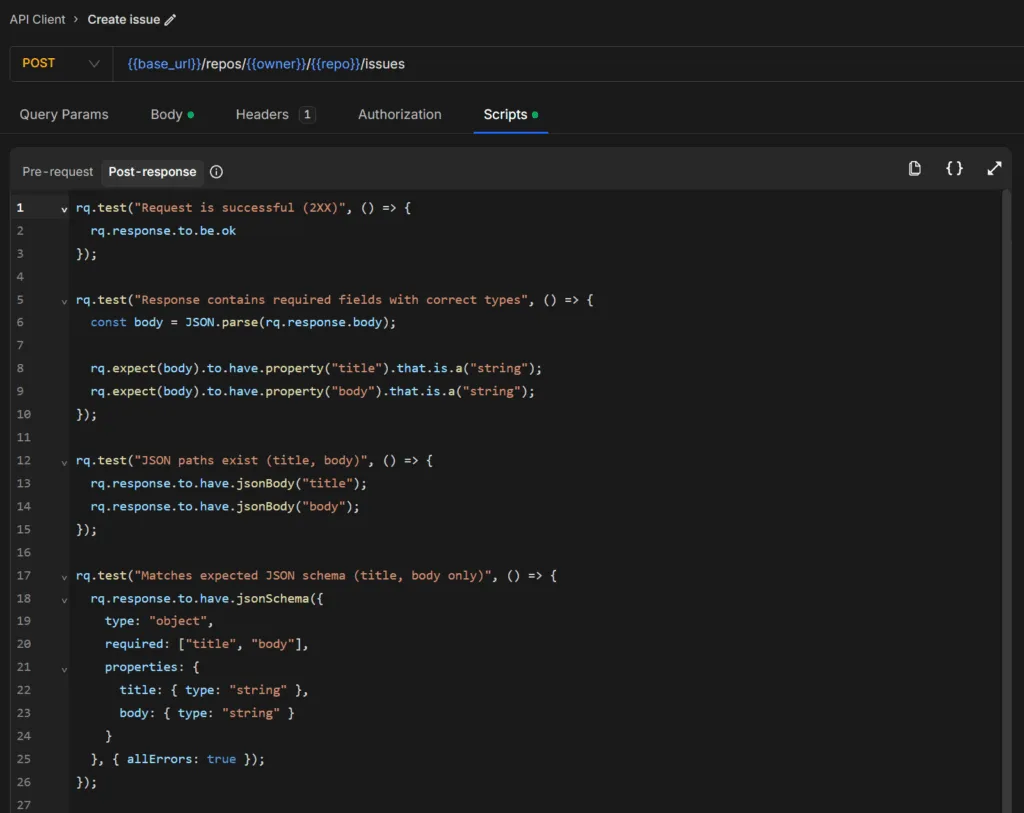

Iterate Instead of One-Shot Generation

Don’t expect perfect tests on the first try. Use Cursor iteratively: generate, review, adjust prompts, regenerate. Over time, you’ll build a solid suite of reusable tests tuned to your API’s quirks.

Combine AI Speed with Human Insight

AI is great at generating lots of cases quickly, but you know your API's edge cases, failure modes, and business logic better than any model. Use AI for coverage and scaffolding, then supplement it with hand-written tests for the scenarios that truly matter.

Conclusion

By combining Cursor and Requestly, you can cut down on repetitive test writing while improving API test coverage. Cursor helps you quickly generate comprehensive test cases, while Requestly ensures those tests run securely in a local-first environment.

The result: faster iteration, stronger reliability, and safer execution. If you're looking to modernize your API testing workflow, give this combination a try. You'll spend less time writing boilerplate tests and more time building what matters.