Chunked Transfer Encoding Explained

Modern web applications often deal with unpredictable or dynamic data. When a server cannot determine the full size of a response ahead of time, traditional methods like setting a Content-Length header fall short. This is where chunked encoding comes into play.

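As a built-in feature of HTTP/1.1, chunked encoding allows data to be sent in segments, making it a critical part of efficient content delivery in APIs, media, and real-time applications. HTTP/2 and HTTP/3 do not use it; their own framing layers already split message bodies into frames.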

What Is Chunked Encoding in HTTP?

Chunked encoding is a transfer mechanism that breaks a response body into smaller pieces called chunks. Each chunk starts with its size in bytes, written in hexadecimal and followed by a CRLF (\r\n). The data comes next, followed by another CRLF. The server sends these chunks sequentially, allowing the client to begin processing the response as it arrives.

This approach eliminates the need to calculate or declare the full size of the response beforehand. The stream concludes when the server sends a zero-length chunk, signaling the end of the message.
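
The framing rules above can be sketched in a few lines of Python. This is a minimal illustration, not a library API. `encode_chunked` is a hypothetical helper name, and it assumes the whole body is already available as a list of byte strings. A real server would instead write each piece as it is produced.

```python
from typing import Iterable


def encode_chunked(pieces: Iterable[bytes]) -> bytes:
    """Frame byte strings as an HTTP/1.1 chunked body (hypothetical helper)."""
    out = bytearray()
    for piece in pieces:
        if not piece:
            # A zero-size chunk means "end of body", so empty pieces are skipped.
            continue
        out += f"{len(piece):x}\r\n".encode("ascii")  # chunk size in hex + CRLF
        out += piece + b"\r\n"                         # chunk data + CRLF
    out += b"0\r\n\r\n"  # zero-size last chunk, no trailers, final CRLF
    return bytes(out)


print(encode_chunked([b"Example", b"Output"]))
# → b'7\r\nExample\r\n6\r\nOutput\r\n0\r\n\r\n'
```

Because sizes are written in hex, a 26-byte piece gets the size line `1a`. Empty pieces are skipped on purpose, because emitting `0\r\n` mid-stream would tell the client that the body had ended.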

Why Does Chunked Encoding Matter?

Delayed rendering or blocked responses can degrade user experience in today’s applications. Chunked encoding enables:

- Progressive rendering: Clients can start displaying content before the full response is available.

- Dynamic data delivery: Useful in situations where response size is unknown, like live updates or generated content.

- Reduced latency: Chunks are transmitted as they’re generated, minimizing wait times.

- Efficient resource usage: The server does not need to hold the entire response in memory just to compute its length, so memory consumption stays lower.

For APIs, streaming services, and microservices, chunked encoding is not just a protocol feature; it’s a performance enabler.

How Chunked Transfer Works in HTTP/1.1

In HTTP/1.1, chunked transfer encoding allows servers to stream data in segments rather than sending it all at once. This is especially useful when the total response size is unknown or the content is being generated dynamically.

Here’s how the process works:

1. The server adds the header: Transfer-Encoding: chunked

2. The response body is divided into chunks, each sent as:

- A line giving the chunk size in hexadecimal, terminated by a carriage return and line feed (\r\n)

- The chunk data itself

- Another \r\n after the data

3. The transmission ends with a final chunk of size 0, followed by optional trailer fields and a blank line (\r\n)

Chunked transfer lets servers send data as it’s generated, reducing wait times and avoiding full buffering. Clients can process each chunk immediately.
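
Here is a minimal sketch of the server side that uses only Python's standard `http.server` module. The handler class name, the two hard-coded pieces, the `serve` helper, and the `time.sleep` call that stands in for real work are all illustrative assumptions. The relevant parts are the `Transfer-Encoding: chunked` header, the absence of Content-Length, and writing each framed chunk as soon as it exists.

```python
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ChunkedHandler(BaseHTTPRequestHandler):
    # Chunked encoding is an HTTP/1.1 feature; the class default is "HTTP/1.0".
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Transfer-Encoding", "chunked")  # and no Content-Length
        self.end_headers()
        for piece in (b"Example", b"Output"):
            # Frame and send each piece as soon as it exists: hex size, CRLF, data, CRLF.
            self.wfile.write(f"{len(piece):x}\r\n".encode("ascii") + piece + b"\r\n")
            self.wfile.flush()
            time.sleep(0.1)  # stand-in for the work that produces the next piece
        self.wfile.write(b"0\r\n\r\n")  # zero-size last chunk, no trailers

    def log_message(self, format, *args):
        pass  # silence the default per-request logging for the demo


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Run the demo server until interrupted (hypothetical helper)."""
    with ThreadingHTTPServer((host, port), ChunkedHandler) as server:
        server.serve_forever()
```

After you call `serve()`, `curl -N http://127.0.0.1:8000/` prints the decoded text, while `curl --raw -N http://127.0.0.1:8000/` shows the size lines and CRLFs as they were sent. Setting `protocol_version` matters, because `BaseHTTPRequestHandler` defaults to HTTP/1.0, which has no chunked encoding.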

Anatomy of a Chunked Response (With Header and Payload Example)

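A chunked HTTP response follows a predictable structure. Here’s what it typically looks like, with the CRLF bytes in the body written out as \r\n. Each header line also ends in CRLF, and an empty line separates the headers from the body:

    HTTP/1.1 200 OK
    Transfer-Encoding: chunked
    Content-Type: text/plain

    7\r\n
    Example\r\n
    6\r\n
    Output\r\n
    0\r\n
    \r\n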

Breakdown:

- 7\r\n: The size of the first chunk in hexadecimal (7 bytes; a 26-byte chunk would be written as 1a)

- Example\r\n: The data payload for the first chunk, followed by its CRLF

- 6\r\n: The second chunk’s size

- Output\r\n: The second chunk’s content

- 0\r\n: The zero-length last chunk, indicating the end of the body

- \r\n: The empty line that completes the message (optional trailer fields may appear before it)

This format ensures that data can be streamed without buffering the entire body in advance. Clients that support HTTP/1.1 can decode this structure and assemble the response seamlessly.
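
The following small decoder shows how a client reassembles this structure from a complete chunked body held in memory. It is a sketch that rests on simplifying assumptions. The name `decode_chunked` is hypothetical. Chunk extensions (anything after `;` on the size line) are discarded, and trailer fields are skipped rather than parsed. No size limits are enforced, so the decoder should not face untrusted input as written.

```python
import re

HEX_SIZE = re.compile(rb"[0-9A-Fa-f]+")


def decode_chunked(raw: bytes) -> bytes:
    """Reassemble a complete chunked body (hypothetical helper, no size limits)."""
    body = bytearray()
    pos = 0
    while True:
        eol = raw.index(b"\r\n", pos)  # ValueError if the size line is unterminated
        size_field = raw[pos:eol].split(b";", 1)[0]  # drop any chunk extension
        # int(x, 16) alone would also accept "0x7" or " 7 ", so check strictly first.
        if not HEX_SIZE.fullmatch(size_field):
            raise ValueError(f"invalid chunk size: {size_field!r}")
        size = int(size_field, 16)
        pos = eol + 2
        if size == 0:
            break
        if raw[pos + size:pos + size + 2] != b"\r\n":
            raise ValueError("chunk data truncated or not followed by CRLF")
        body += raw[pos:pos + size]
        pos += size + 2
    # Skip optional trailer lines until the empty line that ends the message.
    while True:
        eol = raw.index(b"\r\n", pos)
        if eol == pos:
            return bytes(body)
        pos = eol + 2
```

Applied to the example above, `decode_chunked(b"7\r\nExample\r\n6\r\nOutput\r\n0\r\n\r\n")` returns `b"ExampleOutput"`.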

Key Differences: Chunked Encoding vs Content-Length

Both Content-Length and Transfer-Encoding: chunked tell the recipient where an HTTP message body ends, but they operate very differently.

| Aspect | Content-Length | Chunked Encoding |
|---|---|---|
| Requires knowing full size | Yes | No |
| Header used | Content-Length | Transfer-Encoding: chunked |
| Data delivery | One body of declared length | Sequence of size-prefixed chunks |
| Streaming support | Only when the total size is known in advance | Well-suited for output of unknown length |
| Termination | By byte count | By zero-length chunk |

- Use Content-Length when: You know the exact response size upfront.

- Use chunked encoding when: You’re sending dynamic or live content, or want to begin streaming immediately.
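
The difference is easiest to see on the wire. This sketch builds the same two-part body both ways, following the rule that a message never carries both headers, so exactly one framing method is chosen. The `frame_response` name and the `size_known` flag are hypothetical.

```python
from typing import Sequence


def frame_response(parts: Sequence[bytes], size_known: bool) -> bytes:
    """Build a text/plain HTTP/1.1 response using exactly one framing method."""
    head = "HTTP/1.1 200 OK\r\nContent-Type: text/plain\r\n"
    if size_known:
        body = b"".join(parts)
        # Length framing: the receiver stops after exactly this many bytes.
        head += f"Content-Length: {len(body)}\r\n\r\n"
        return head.encode("ascii") + body
    # Chunked framing: never combined with Content-Length.
    head += "Transfer-Encoding: chunked\r\n\r\n"
    body = b"".join(
        f"{len(p):x}\r\n".encode("ascii") + p + b"\r\n" for p in parts if p
    )
    return head.encode("ascii") + body + b"0\r\n\r\n"
```

With `size_known=True` the headers end in `Content-Length: 13`, so the entire body (or at least its exact size) has to exist before the first byte goes out. With `size_known=False`, each part could have been written the moment it was ready.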

Real-World Use Cases Of Chunked Transfer Encoding

Here are some practical scenarios where chunked encoding is essential:

- Streaming Media and Generated Downloads: Lets a server start sending audio, video, or files that are produced on the fly (for example, a live transcode or an archive built on request) before their final size is known, so playback or downloading can begin immediately.

- Real-Time Events and Live Feeds: Powers web applications that require continuous delivery of updates, such as live sports scores, financial tickers, or chat messages.

- Incremental API Responses: Helps APIs return partial data as soon as a portion is ready, reducing wait time for users when processing large datasets or slow backend services.

- Server-Sent Events (SSE): Over HTTP/1.1, an SSE stream is typically delivered as a chunked response, which makes it a common way to push updates from server to client for dashboards and real-time notifications.

- Web Scraping and Data Aggregation: Enables efficient harvesting of large volumes of web data, as scrapers can process information as it streams in.

Debugging Chunked HTTP Responses In Dev And QA Workflows

Below are effective practices for debugging chunked responses in development and QA workflows:

- Leverage network inspection tools like Requestly to view each HTTP chunk and examine for missing, corrupted, or delayed data.

- Test application resilience by intentionally cutting off, delaying, or modifying chunks to confirm that the client handles incomplete or malformed data safely.

- Analyze the latency and throughput between chunk deliveries to ensure timely updates and discover any inefficiencies in data streaming.

- Ensure user interfaces and backend components handle chunked responses correctly, such as progressively rendering content as chunks arrive.

- Integrate debugging and validation steps into automated test suites to catch issues related to chunk parsing and error handling early in the development cycle.
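
You can rehearse the "cut off a chunk" scenario without a live server by feeding hand-written response bytes to Python's standard-library client parser. This sketch constructs `http.client.HTTPResponse` directly around a hypothetical `FakeSocket`. That relies on an implementation detail: the response object only calls `makefile()` on the socket it is given. `HEAD` and `read_body` are illustrative names too.

```python
import http.client
import io


class FakeSocket:
    """Stand-in socket: HTTPResponse only calls makefile() on the object it is given."""

    def __init__(self, raw: bytes):
        self._raw = raw

    def makefile(self, mode, *args, **kwargs):
        return io.BytesIO(self._raw)


HEAD = b"HTTP/1.1 200 OK\r\nTransfer-Encoding: chunked\r\n\r\n"


def read_body(raw: bytes) -> bytes:
    """Parse raw response bytes with the standard-library client, return the body."""
    resp = http.client.HTTPResponse(FakeSocket(raw))
    resp.begin()        # parse the status line and headers
    return resp.read()  # de-chunk the body


# Well-formed: both chunks are reassembled.
print(read_body(HEAD + b"7\r\nExample\r\n6\r\nOutput\r\n0\r\n\r\n"))

# Cut off before the zero-size last chunk: the client must not treat this as complete.
try:
    read_body(HEAD + b"7\r\nExample\r\n6\r\nOutput\r\n")
except http.client.IncompleteRead as exc:
    print("truncated; partial body:", exc.partial)
```

In CPython's `http.client`, a body that stops before the zero-size chunk raises `http.client.IncompleteRead`, and its `partial` attribute holds the bytes decoded so far. A resilient client should check for this condition rather than silently treat the partial body as complete.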

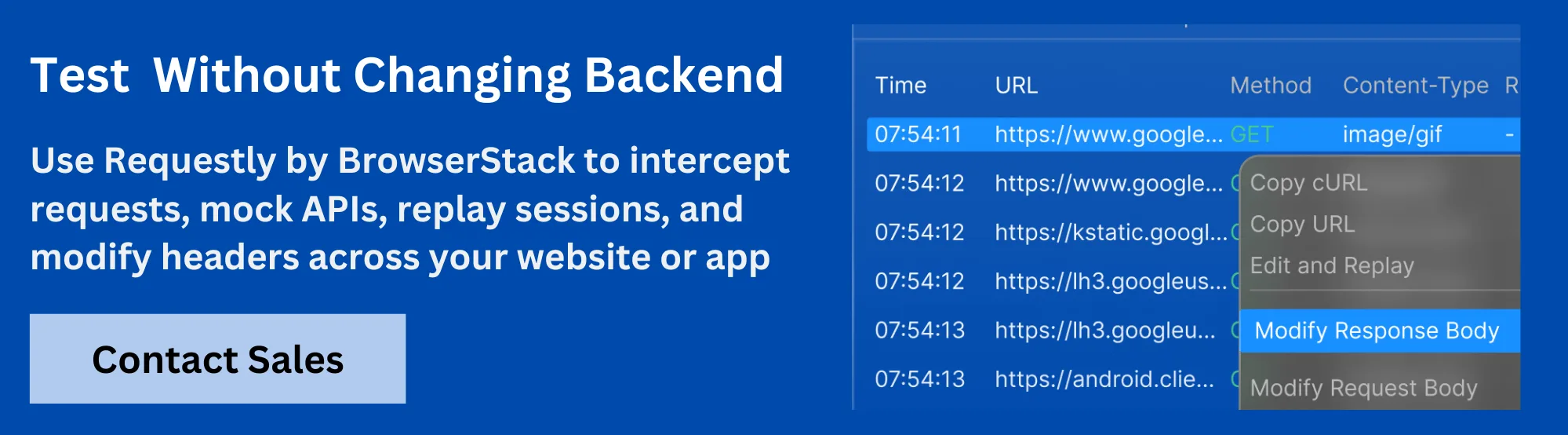

Using Requestly to Intercept and Inspect Chunked Responses

Debugging chunked encoding manually can be time-consuming, especially when working with streaming APIs or dynamic payloads.

Requestly HTTP Interceptor simplifies this by letting developers capture, inspect, and modify chunked HTTP traffic in real time, without complex proxy setup.

With Requestly, you can:

- View chunked response headers and body structure clearly

- Simulate or rewrite chunked responses for testing edge cases

- Inject headers, modify payloads, or delay chunks to mimic streaming conditions

- Share debugging sessions with your team for better collaboration

This makes it easier to test how frontends or clients handle streamed data without relying on backend changes or system-level tools.

Chunked Encoding Support Across Browsers, Clients, and Servers

Chunked transfer encoding is widely supported across modern web stacks, but implementation can vary by environment.

Browsers:

- All major browsers support chunked encoding natively, since HTTP/1.1 requires clients to be able to parse it

- JavaScript’s fetch() API and ReadableStream allow processing streamed responses on the client side

HTTP Clients:

- Tools like curl, Postman, and Axios handle chunked responses automatically

- Low-level libraries in Node.js, Python, and Java offer manual control for testing and streaming

Web Servers:

- Nginx, Apache, Express.js, Spring Boot, and Flask support chunked encoding

- Some frameworks enable it automatically when content length is not set

Cross-platform support ensures chunked encoding works reliably across web, mobile, and backend applications. However, developers should still validate how proxies, CDNs, or gateways handle chunked traffic in production.

Common Errors When Handling Chunked Transfers

While chunked encoding is effective, misconfigurations or incomplete implementations can lead to issues. Common errors include:

- Missing or malformed chunk size: Clients may fail to parse responses if the size is not in valid hexadecimal.

- Omitted terminating chunk: Without the final 0\r\n\r\n, clients may hang or discard the response.

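- Header conflicts: HTTP/1.1 forbids a sender from including both Content-Length and Transfer-Encoding: chunked in one message. When a proxy and a backend disagree about which header wins, the ambiguity creates request smuggling risks.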

- Proxy stripping or rewriting: Intermediaries may incorrectly buffer or remove chunked formatting, breaking streaming behavior.

Testing across environments and validating intermediate network components is essential for reliable chunked transfer handling.
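
The "header conflicts" check can be sketched as a function that refuses ambiguous framing before it reads any body. The function is hypothetical and deliberately stricter than the minimum. RFC 9112 lets Transfer-Encoding override Content-Length, but it also says such a message ought to be handled as an error, which is what this version does. The sketch assumes that header names have already been lower-cased and that duplicate headers have been merged into one comma-separated value.

```python
import re
from typing import Mapping, Union

DIGITS = re.compile(r"[0-9]+")


def body_framing(headers: Mapping[str, str]) -> Union[str, int]:
    """Return "chunked" or the Content-Length of a request body; reject ambiguity.

    Assumes lower-cased header names and duplicates already merged into one
    comma-separated value (hypothetical helper, not a library API).
    """
    te = headers.get("transfer-encoding")
    cl = headers.get("content-length")
    if te is not None and cl is not None:
        # RFC 9112 lets Transfer-Encoding win, but also says such a message
        # ought to be handled as an error; rejecting it blocks smuggling.
        raise ValueError("both Transfer-Encoding and Content-Length present")
    if te is not None:
        codings = [c.strip().lower() for c in te.split(",")]
        if codings[-1] != "chunked":
            # Without a final chunked coding, a request body has no reliable end.
            raise ValueError(f"chunked is not the final transfer coding: {te!r}")
        return "chunked"
    if cl is not None:
        if not DIGITS.fullmatch(cl):  # rejects "+5", " 5", "0x5", "5, 5"
            raise ValueError(f"invalid Content-Length: {cl!r}")
        return int(cl)
    return 0  # a request with neither header has an empty body
```

Returning 0 when neither header is present is correct for requests only. A response without either header is delimited by the server closing the connection instead.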

Chunked Transfer in API Streaming and Live Data Scenarios

Chunked encoding is ideal for use cases where data is generated and delivered in real time. It allows APIs to push updates to clients without waiting for the entire dataset to be ready.

Common scenarios include:

- Server-Sent Events (SSE) or event streams

- Live logs, chat messages, or progress updates

- Partial API responses for pagination or search results

- Long polling endpoints in backend systems

By sending small, incremental updates, servers can keep clients responsive and reduce perceived latency in dynamic applications.
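
The following sketch illustrates incremental updates. It formats each event in the Server-Sent Events text format (`data: ...` followed by a blank line) and frames it as one HTTP chunk. Both function names are hypothetical, and `progress_updates` stands in for a real long-running job. Most frameworks do the chunk framing for you; the point here is to show the bytes a server would write.

```python
import json
import time
from typing import Iterable, Iterator


def sse_chunks(events: Iterable[dict]) -> Iterator[bytes]:
    """Yield one HTTP chunk per Server-Sent Event, as soon as each event exists."""
    for event in events:
        # SSE text format: a "data:" line followed by a blank line.
        message = f"data: {json.dumps(event)}\n\n".encode("utf-8")
        yield f"{len(message):x}\r\n".encode("ascii") + message + b"\r\n"
    yield b"0\r\n\r\n"  # end of the chunked body once the stream is finished


def progress_updates(steps: int) -> Iterator[dict]:
    """Hypothetical producer standing in for a long-running job."""
    for i in range(1, steps + 1):
        time.sleep(0.05)  # simulated work between updates
        yield {"step": i, "of": steps}


for chunk in sse_chunks(progress_updates(3)):
    print(chunk)
```

Because the events come from a generator, each chunk is produced only when its event exists, so nothing is buffered until the job finishes. Intermediaries may re-chunk a stream, so a client should split events on the SSE blank-line delimiter rather than on chunk boundaries.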

Chunked Encoding in Web Frameworks and CDNs

Many web frameworks and content delivery networks (CDNs) offer built-in support for chunked responses, but behavior may vary.

Framework support:

- Express.js and Spring Boot stream responses automatically when Content-Length is omitted

- Django and Flask allow generators or streaming responses for chunked delivery

- Node.js sends chunked data via res.write() by default when no content length is set

CDN behavior:

- CDNs like Cloudflare or Akamai may buffer or compress chunked content depending on cache rules

- Some CDNs strip or convert chunked responses unless explicitly configured to preserve streaming

It’s important to test chunked behavior in staging environments with your CDN or reverse proxy to ensure consistent delivery.

Chunked vs Gzip vs HTTP/2 Push: When to Use What

These three mechanisms address different needs, and they are not mutually exclusive; a chunked response can also be gzip-compressed. Understanding when to use chunked encoding, Gzip compression, or HTTP/2 push helps optimize performance and delivery.

| Feature | Chunked Encoding | Gzip Compression | HTTP/2 Push |
|---|---|---|---|
| Purpose | Streams data in segments | Reduces size with compression | Preloads resources proactively |
| Ideal for | Dynamic or streaming content | Compressible text payloads (HTML, JSON, CSS) | Sending dependent assets early |
| Overhead | Minimal (one size line per chunk) | Adds CPU load for compression | Requires browser and server support |
| Compatibility | HTTP/1.1 only | Any HTTP version (via Content-Encoding) | Only supported in HTTP/2 |

Use chunked encoding for real-time streaming, Gzip for size reduction, and HTTP/2 push when optimizing asset delivery during initial page loads. Keep in mind that Chrome and Firefox have since removed support for HTTP/2 server push.
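
To show that the first two mechanisms combine, the sketch below compresses a stream incrementally with Python's `zlib` and frames each compressed piece as a chunk. Such a response would carry both `Content-Encoding: gzip` and `Transfer-Encoding: chunked`. The `gzip_chunked` name is hypothetical. The sketch calls `Z_SYNC_FLUSH` after every piece, trading a little compression ratio for the ability to decompress each chunk as it arrives.

```python
import zlib
from typing import Iterable, Iterator


def gzip_chunked(pieces: Iterable[bytes]) -> Iterator[bytes]:
    """Compress a stream incrementally and frame the output as HTTP chunks.

    Intended for a response sent with Content-Encoding: gzip and
    Transfer-Encoding: chunked (hypothetical helper).
    """
    compressor = zlib.compressobj(wbits=31)  # wbits=31 selects the gzip container

    def frame(data: bytes) -> bytes:
        return f"{len(data):x}\r\n".encode("ascii") + data + b"\r\n"

    for piece in pieces:
        # Z_SYNC_FLUSH pushes out everything compressed so far, so the client
        # can decompress this piece now instead of waiting for the end.
        data = compressor.compress(piece) + compressor.flush(zlib.Z_SYNC_FLUSH)
        if data:
            yield frame(data)
    tail = compressor.flush()  # default Z_FINISH: remaining data + gzip trailer
    if tail:
        yield frame(tail)
    yield b"0\r\n\r\n"
```

The receiver first removes the chunk framing (transfer coding) and then decompresses the joined payload (content coding), which is the reverse of the order applied here.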

Best Practices for Testing and Simulating Chunked Transfers

Effective testing and simulation of chunked transfers are crucial for ensuring the robustness and performance of web applications. Adhering to best practices can help identify and resolve potential issues before they impact users.

Here are some best practices for testing and simulating chunked transfers:

- Understanding Chunked Encoding: Data is transferred in variable-sized chunks, which is ideal when the total response size is unknown.

- Simulating Various Chunk Sizes: Test with diverse chunk sizes (small, medium, large) to uncover performance bottlenecks and ensure stability.

- Handling Network Latency And Packet Loss: Mimic network latency and packet loss to evaluate application resilience and recovery from incomplete reception.

- Testing With Malformed Chunks: Assess application response to incorrect sizes or invalid characters to harden against corruption and attacks.

- Validating Data Integrity: Verify after transfer that the received data matches the original, using checksums or hash comparisons.

- Performance Benchmarking: Monitor transfer speeds, central processing unit (CPU) utilization, and memory consumption to optimize processing.

- Using Enterprise-Grade Platforms For Testing: Utilize platforms like BrowserStack for comprehensive, real-device testing and network simulation. Explore BrowserStack’s capabilities for enterprise testing to ensure applications perform flawlessly across all environments.
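
The chunk-size and data-integrity practices can be combined into a small randomized round-trip test. In this sketch, which uses hypothetical helper names, a payload is re-encoded with random chunk sizes ranging from tiny to large. Python's standard `http.client` parser decodes it; the parser is constructed directly around a stand-in socket, which relies on an implementation detail of CPython's client. The result is then compared with the original by SHA-256 digest. Decoding with an independent parser, rather than one written alongside the encoder, keeps the test from sharing the encoder's bugs.

```python
import hashlib
import http.client
import io
import random


class FakeSocket:
    """Stand-in socket: HTTPResponse only calls makefile() on the object it is given."""

    def __init__(self, raw: bytes):
        self._raw = raw

    def makefile(self, mode, *args, **kwargs):
        return io.BytesIO(self._raw)


def random_chunked(payload: bytes, rng: random.Random, max_chunk: int) -> bytes:
    """Encode payload as a chunked body using random chunk sizes in [1, max_chunk]."""
    out, pos = bytearray(), 0
    while pos < len(payload):
        piece = payload[pos:pos + rng.randint(1, max_chunk)]
        out += f"{len(piece):x}\r\n".encode("ascii") + piece + b"\r\n"
        pos += len(piece)
    out += b"0\r\n\r\n"
    return bytes(out)


def roundtrip_ok(payload: bytes, seed: int, max_chunk: int) -> bool:
    """Decode with http.client and compare SHA-256 digests with the original."""
    raw = b"HTTP/1.1 200 OK\r\nTransfer-Encoding: chunked\r\n\r\n"
    raw += random_chunked(payload, random.Random(seed), max_chunk)
    resp = http.client.HTTPResponse(FakeSocket(raw))
    resp.begin()
    received = resp.read()
    return hashlib.sha256(received).hexdigest() == hashlib.sha256(payload).hexdigest()


payload = bytes(random.Random(0).getrandbits(8) for _ in range(10_000))
for max_chunk in (1, 64, 4096, 65536):  # tiny, small, medium, large chunks
    results = [roundtrip_ok(payload, seed, max_chunk) for seed in range(3)]
    print(f"max_chunk={max_chunk}: {'ok' if all(results) else 'MISMATCH'}")
```

Fixed seeds make any failure reproducible. Comparing digests rather than raw bytes mirrors the case where only a checksum of the original is available on the receiving side.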

Security Considerations: Chunked Encoding Vulnerabilities

While chunked encoding offers flexibility in data transfer, it also introduces potential security vulnerabilities if not handled properly. Understanding these risks is crucial for building secure web applications.

Here are some common security considerations related to chunked encoding:

- Denial Of Service Attacks (DoS): Malformed or oversized chunks can exhaust server resources; enforce strict size limits.

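- HTTP Desync Attacks: When a proxy and a backend disagree about where a message ends (for example, one honors Content-Length and the other Transfer-Encoding), an attacker can smuggle a request past the proxy; ensure consistent, strict parsing at every hop.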

- Content Spoofing: Improper chunk processing risks forged content display; validate chunk and message integrity.

- Resource Exhaustion: Incomplete chunked data can exhaust server resources; implement connection timeouts and limits.

- Bypassing Security Controls: Malicious payloads split across chunks can evade Web Application Firewalls (WAFs) that inspect raw bytes; make sure the WAF reassembles and inspects the decoded body.

- Injection Attacks: Chunked framing does not make a payload any more trustworthy. Decoded data can still carry SQL injection, cross-site scripting (XSS), or command injection, so rigorously validate and sanitize it like any other input.
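
The size-limit and strict-parsing advice can be sketched as a decoder that refuses oversized or malformed input before copying any of it into the body. The limits below are illustrative defaults, not recommendations, and `decode_chunked_bounded` and `ChunkLimitError` are hypothetical names. A production server would also apply the connection timeouts mentioned above and would decode incrementally from the socket rather than from a complete bytes object.

```python
import string

HEX_DIGITS = frozenset(string.hexdigits.encode("ascii"))  # set of byte values


class ChunkLimitError(ValueError):
    """Raised when a chunked body breaks a configured limit or the grammar."""


def decode_chunked_bounded(
    raw: bytes,
    max_size_line: int = 64,   # illustrative limit on the size line length
    max_chunk: int = 1 << 20,  # illustrative 1 MiB per chunk
    max_total: int = 8 << 20,  # illustrative 8 MiB per body
) -> bytes:
    """Decode a chunked body while enforcing size limits (hypothetical helper)."""
    body, pos = bytearray(), 0
    while True:
        eol = raw.find(b"\r\n", pos, pos + max_size_line + 2)
        if eol < 0:
            raise ChunkLimitError("size line missing or longer than max_size_line")
        size_field = raw[pos:eol].split(b";", 1)[0]
        # Strict hex only: int(x, 16) alone would also accept "0x1a", "+1a", " 1a".
        if not size_field or any(b not in HEX_DIGITS for b in size_field):
            raise ChunkLimitError(f"invalid chunk size {size_field!r}")
        size = int(size_field, 16)
        if size > max_chunk:
            raise ChunkLimitError(f"chunk of {size} bytes exceeds max_chunk")
        if len(body) + size > max_total:
            raise ChunkLimitError("body exceeds max_total")
        pos = eol + 2
        if size == 0:
            # Only the no-trailer form is accepted, to keep the sketch short.
            if raw[pos:pos + 2] != b"\r\n":
                raise ChunkLimitError("missing final CRLF (or unsupported trailers)")
            return bytes(body)
        if raw[pos + size:pos + size + 2] != b"\r\n":
            raise ChunkLimitError("chunk data truncated or not followed by CRLF")
        body += raw[pos:pos + size]
        pos += size + 2
```

Checking the declared size before using it matters because the size line is attacker-controlled. A value such as `ffffffffffffffff` would otherwise drive a huge allocation, or overflow a fixed-width integer in languages without arbitrary-precision integers.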

Conclusion

Chunked transfer encoding is a powerful feature of HTTP/1.1 that enhances the flexibility of data transmission on the web.

It enables servers to send data in a streaming fashion, particularly useful when the content length is unknown at the outset of the response.

However, its implementation demands careful consideration, especially concerning performance optimization, robust testing, and security. Addressing potential vulnerabilities and adopting best practices in development and testing is crucial.
