How MCP Impacts APIs: A Comprehensive Analysis

LLMs have changed the way systems interact with APIs. The client isn’t a frontend or a service anymore; it’s a model that depends on structure, predictability, and clear capabilities. MCP sits in the middle of this shift, defining how models access tools and how those tools rely on APIs underneath.

This write-up breaks down what MCP is, how it changes API behaviour, how it differs from traditional interfaces, how developers use it, and where Requestly fits into the workflow.

What is MCP

MCP stands for Model Context Protocol, a standard that defines how large language models interact with external tools in a predictable and structured manner. Instead of guessing what functions exist or how to run them, the model gets a clear contract describing the actions it can call and the data it can access.

The easiest way to understand MCP is to think of it as a bridge. On one side is the model, and on the other side are the tools, scripts, APIs, and files that developers work with.

MCP defines a common language between the two, so there is no ambiguity about what is available or how anything should be executed.

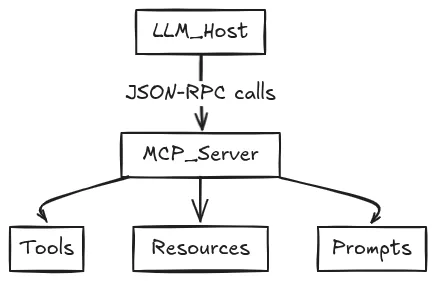

At its core, MCP exposes three key elements:

- Tools

Tools are explicit actions a model can execute, like fetching user data, running a diagnostic script, calling a backend service, or generating a file. Each tool includes a schema describing required inputs and expected outputs. This ensures the model calls the tool correctly rather than improvising through natural language prompts.

- Resources

Resources represent structured data that the model is allowed to read. These can include JSON files, configuration folders, environment variables, or logs. By giving the model structured, real-world data, MCP helps reduce hallucination and keeps the model grounded in factual context.

- Prompts

Prompts are reusable instruction templates that the model can request when initiating a workflow. They offer a standardized way to perform repeatable tasks, such as analyzing logs, generating summaries, or preparing release notes, without depending on vague instructions.
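To make the idea of a tool schema concrete, here is a minimal sketch in Python. The `name` / `description` / `inputSchema` layout mirrors how MCP describes a tool, but the `get_user` tool, the `validate_arguments` helper, and the tiny type table are assumptions made up for illustration. A real server would normally rely on an SDK or a full JSON Schema validator.

```python
from typing import Any

# Hypothetical tool descriptor. The name/description/inputSchema layout follows
# the shape MCP uses when listing tools; "get_user" itself is invented here.
GET_USER_TOOL: dict[str, Any] = {
    "name": "get_user",
    "description": "Fetch a user record by ID.",
    "inputSchema": {
        "type": "object",
        "properties": {"id": {"type": "string"}},
        "required": ["id"],
    },
}

# Maps the few JSON Schema type names used above to Python types.
_JSON_TYPES = {"string": str, "boolean": bool, "object": dict}


def validate_arguments(tool: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call is well-formed."""
    schema = tool["inputSchema"]
    problems: list[str] = []
    # Every required field must be present.
    for field in schema.get("required", []):
        if field not in arguments:
            problems.append(f"missing required field: {field}")
    # Every supplied field must be declared and have the declared type.
    for field, value in arguments.items():
        spec = schema["properties"].get(field)
        if spec is None:
            problems.append(f"unexpected field: {field}")
        elif not isinstance(value, _JSON_TYPES[spec["type"]]):
            problems.append(f"wrong type for field: {field}")
    return problems
```

With this in place, `validate_arguments(GET_USER_TOOL, {"id": "123"})` returns an empty list, while a call that omits `id` or passes a number is rejected before any backend logic runs.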

MCP exists to solve the M×N integration problem.

Without MCP, each of M models would need a custom connector for each of N tools. With MCP, both sides follow a unified protocol, which reduces integration overhead and makes LLM-powered tooling far easier to maintain.
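The arithmetic behind the M×N problem is simple enough to sketch. The function names and the example counts below are illustrative assumptions, not figures from any real deployment.

```python
def connectors_without_protocol(models: int, tools: int) -> int:
    """Every model needs its own connector to every tool."""
    return models * tools


def adapters_with_protocol(models: int, tools: int) -> int:
    """Each model and each tool implements the shared protocol once."""
    return models + tools


# Illustrative numbers only: 4 models and 25 tools.
print(connectors_without_protocol(4, 25))  # 100
print(adapters_with_protocol(4, 25))       # 29
```

The gap widens as either side grows, which is why a shared protocol pays off more as the number of models and tools increases.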

Behind the scenes, MCP uses a straightforward host-server architecture.

The host runs the LLM and requests actions, and the server exposes tools, resources, and prompts.

They communicate using JSON-RPC 2.0 messages, and the schemas attached to each tool let both sides validate a call before anything runs.
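As a rough sketch of what travels over the wire, the Python below builds a JSON-RPC 2.0 request for a tool call and a matching response. The `tools/call` method and the `content` / `isError` result fields follow the MCP specification as commonly documented. The helper names, the `getUser` tool, and the payload values are assumptions for illustration.

```python
import json
from typing import Any


def build_tool_call(request_id: int, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request asking the server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def build_tool_result(request_id: int, text: str, is_error: bool = False) -> dict[str, Any]:
    """Build the matching response; the id ties it back to the request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": is_error},
    }


request = build_tool_call(1, "getUser", {"id": "123"})
response = build_tool_result(1, '{"id": "123", "name": "Ada"}')
print(json.dumps(request, indent=2))
print(json.dumps(response, indent=2))
```

The shared `id` is what lets the host match each response to the request that produced it, even when several calls are in flight.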

In practice, MCP gives the model the same clarity a developer gets from a well-documented API: defined boundaries, predictable behavior, and a controlled environment for running real operations.

How MCP Impacts APIs

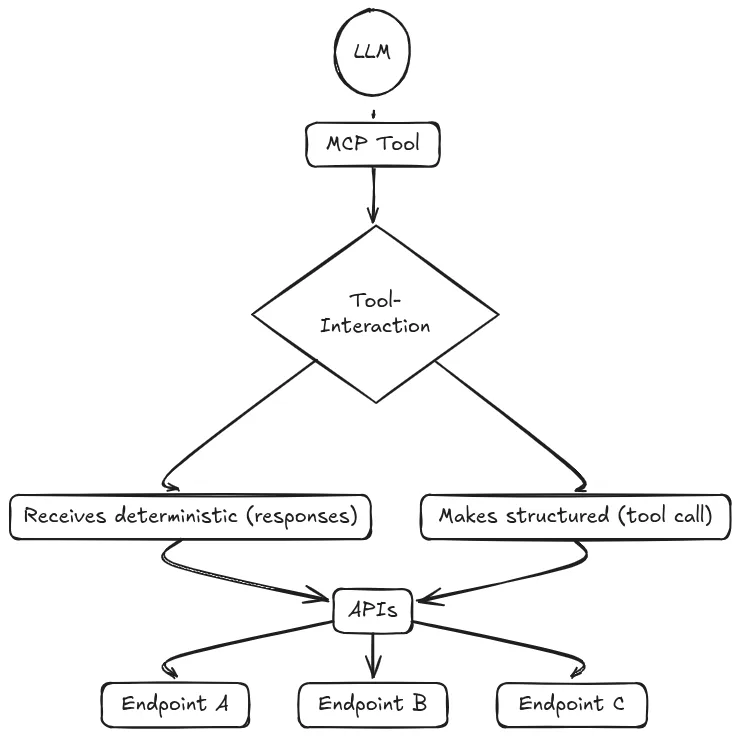

When you start working with MCP, one thing becomes obvious very quickly: it changes how APIs need to behave in an LLM-driven world, because it changes who the “client” is and how that client expects APIs to respond.

MCP doesn’t replace APIs, but it does change how APIs are consumed, structured, and tested, especially when the caller is no longer a human-built client but an LLM making tool-driven decisions.

The biggest shift is that MCP expects APIs to behave in ways that are predictable for a model. LLMs rely heavily on patterns and structure, which means the APIs sitting behind MCP tools need to provide cleaner schemas, consistent responses, and deterministic behavior.

If two similar API calls return different shapes of data or inconsistent error messages, a human developer can adjust, but an LLM often cannot. It depends on strict patterns to avoid misinterpreting the result.
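One way to give the model a single pattern to rely on is to normalize whatever the backend returns into one envelope inside the tool. The sketch below is an assumption about how that could look: the `ok` / `data` / `error` envelope and the `normalize_response` helper are invented for illustration, not part of MCP.

```python
from typing import Any


def normalize_response(status: int, body: dict[str, Any]) -> dict[str, Any]:
    """Collapse differing backend payloads into one envelope: ok, data, error."""
    if 200 <= status < 300:
        # Some endpoints wrap the payload in "data", others return it bare.
        return {"ok": True, "data": body.get("data", body), "error": None}
    # Error details may arrive as "error" or "message", as a string or an object.
    message = body.get("error") or body.get("message") or "unknown error"
    if isinstance(message, dict):
        message = message.get("message", "unknown error")
    return {
        "ok": False,
        "data": None,
        "error": {"status": status, "message": str(message)},
    }
```

Whatever the upstream API does, the model then sees the same three keys on every call, so a failure is always recognizable as a failure.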

MCP also pushes API design toward smaller, action-level endpoints rather than broad, multipurpose ones. Since MCP tools wrap capabilities as discrete actions, it’s easier for a model to interact with APIs that expose clear, singular operations like /generate-report, /sync-user, or /validate-config, rather than overloaded endpoints that behave differently based on internal logic or input combinations. These action-oriented patterns align naturally with MCP’s “tool” abstraction.

Another impact is how MCP uses APIs behind the scenes. Even though the model interacts with a tool, that tool often calls an API internally to perform the actual work. MCP simply layers structure and context on top of the API. In other words, MCP becomes the coordination layer, but APIs remain the execution layer. This means the reliability of an MCP workflow still depends directly on the reliability of the underlying APIs.
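The split between coordination and execution can be sketched as a tool handler that does nothing but wrap an API call. In the Python below, the transport is injected so the example runs without a network. The `/validate-config` path, the `valid` response field, and the result shape are assumptions for illustration.

```python
from typing import Callable

# Transport signature: (method, path, json_body) -> (status_code, parsed_body).
Transport = Callable[[str, str, dict], tuple[int, dict]]


def make_validate_config_tool(transport: Transport) -> Callable[[dict], dict]:
    """Return a tool handler that delegates the real work to an HTTP API."""

    def validate_config(arguments: dict) -> dict:
        status, body = transport(
            "POST", "/validate-config", {"config": arguments["config"]}
        )
        if status != 200:
            # Surface the API failure explicitly instead of guessing a result.
            return {"isError": True, "text": f"validate-config failed with HTTP {status}"}
        return {"isError": False, "text": "valid" if body.get("valid") else "invalid"}

    return validate_config


def fake_transport(method: str, path: str, body: dict) -> tuple[int, dict]:
    """Stand-in for a real HTTP client, used only for this example."""
    return 200, {"valid": True}


tool = make_validate_config_tool(fake_transport)
print(tool({"config": {"timeout": 30}}))
```

The tool adds structure, but its answer is only as good as what the API returned, which is the dependency described above.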

Because of this dependency, MCP increases the need for robust testing and controlled environments. Developers need to know how an MCP tool will behave when the API returns different states, such as success, failures, delays, malformed data, or changed schemas. This makes mocking, rewriting, and debugging far more important. If an MCP tool expects a specific schema and the API suddenly changes, the entire workflow can break, and models can make incorrect decisions without any obvious error message.

In short, MCP brings APIs closer to an LLM’s execution path. It puts pressure on API consistency, encourages cleaner endpoint design, and makes testing essential because any shift in API behavior directly shapes how the model performs in real-world tools.

Difference Between MCP & APIs

When you look at MCP and APIs side by side, they feel similar at first. Both involve structured calls, inputs, outputs, and some form of contract, but the moment you dig into how they’re used, the differences become very clear.

The first major difference is how each one exposes functionality. An API exposes endpoints: URLs that represent resources or operations. MCP exposes actions or capabilities through tools.

Instead of thinking in terms of routes like /users/123 or /reports/generate, MCP thinks in terms of what the model should do: “fetch user,” “generate report,” “validate configuration.”

It’s centered around actions, not URLs.

The second difference is who the client is. APIs are designed for human-built clients: frontends, servers, and mobile apps. MCP is designed for LLMs. Models rely heavily on structure and schema clarity, so MCP has every tool declare its input format, and ideally its output format, in a schema. That’s not always the case with REST APIs, where error messages, formats, or fields can vary based on implementation.

The third difference is state and context. REST APIs are typically stateless: every call must contain everything needed to process it. MCP workflows carry context. The model has access to previous steps, available resources, and the state of a workflow, which makes tool calls feel more like a sequence than isolated requests.

There’s also a clear security difference. APIs typically rely on tokens, auth headers, and role-based access. MCP adds a second layer of permissioned tool access, which explicitly controls what operations the model is allowed to trigger.
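A permission layer of this kind can be as small as an allow-list checked before dispatch. The sketch below is an assumption about one possible shape: `ToolNotPermitted`, `call_tool`, and the two example tools are invented for illustration, and real hosts may enforce permissions differently, for example through user approval prompts.

```python
from typing import Any, Callable

ToolHandler = Callable[[dict[str, Any]], dict[str, Any]]


class ToolNotPermitted(Exception):
    """Raised when the model asks for a tool outside its allow-list."""


def call_tool(
    registry: dict[str, ToolHandler],
    allowed: set[str],
    name: str,
    arguments: dict[str, Any],
) -> dict[str, Any]:
    # The permission check runs before any handler code does.
    if name not in allowed:
        raise ToolNotPermitted(name)
    return registry[name](arguments)


# Hypothetical tools: one read-only, one destructive.
registry: dict[str, ToolHandler] = {
    "getUser": lambda args: {"id": args["id"]},
    "deleteUser": lambda args: {"deleted": True},
}
allowed = {"getUser"}

print(call_tool(registry, allowed, "getUser", {"id": "123"}))
```

Here the API token behind `deleteUser` might well permit the deletion, but the model never reaches it because the tool is not on its list.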

A simple comparison shows the difference clearly:

REST API call:

GET /users/123 -> returns a JSON payload with user details.

MCP tool call:

"callTool": { "name": "getUser", "arguments": { "id": "123" } } -> the server handles the logic and returns structured output defined by the tool schema.

How MCPs Are Used

The real value of MCP shows up when you look at how developers are already using it inside tools, editors, and local workflows. MCP wasn’t designed as a theoretical protocol; it was intended to let LLMs work safely with real systems.

One of the most common uses is inside developer environments.

IDEs can expose tasks like running tests, searching logs, or generating code patches as MCP tools. Instead of a model guessing the right terminal command, MCP gives it a direct action such as “runTests,” “openFile,” or “createDiff.” This keeps the workflow predictable and avoids accidental commands.

Another common use is exposing local scripts or utilities through MCP.

If a team has a Python script that cleans data or a CLI tool that validates configs, they can expose it as a tool in an MCP server. The model can then trigger these operations with structured arguments, which makes automation far easier without building a full API around every script.
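Exposing an existing function as a tool mostly means registering it under a name and routing calls to it. The Python below is a dependency-free sketch of that idea. The `tool` decorator, the `TOOLS` registry, and the `cleanData` example are assumptions for illustration and do not represent the API of any MCP SDK.

```python
from typing import Any, Callable

ToolHandler = Callable[[dict[str, Any]], dict[str, Any]]

# Registry of exposed tools, keyed by the name the model will call.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable[[ToolHandler], ToolHandler]:
    """Decorator that registers an existing function as a callable tool."""

    def register(func: ToolHandler) -> ToolHandler:
        TOOLS[name] = {"description": description, "handler": func}
        return func

    return register


@tool("cleanData", "Strip whitespace and drop empty rows.")
def clean_data(arguments: dict[str, Any]) -> dict[str, Any]:
    rows = [row.strip() for row in arguments["rows"]]
    return {"rows": [row for row in rows if row]}


def dispatch(name: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Route a structured call to the registered handler."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name]["handler"](arguments)


print(dispatch("cleanData", {"rows": [" a ", "", "b  ", "   "]}))
```

The script itself stays unchanged; only a thin registration layer is added around it.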

MCP also plays well with debugging and inspection workflows.

Tools can allow the model to fetch logs, read config files, review error traces, or inspect recent activity directly from the development environment. Because resources in MCP are structured, the model can interpret them without guessing or misreading formats.

A simpler but powerful use case is workflow automation.

Models can chain MCP tools together to perform multi-step actions, for example, read a configuration file, validate it, generate a fix, and save the updated file. The protocol manages the context so the model knows what step came before and what data is available next.
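The read, validate, fix, and save sequence can be sketched as a small pipeline. In the Python below an in-memory dictionary stands in for the file system, and the required keys, default values, and function name are all assumptions for illustration. In a real workflow the model would decide each step from the previous tool result rather than follow a hard-coded order.

```python
import json

# Hypothetical rules for what a valid config must contain.
REQUIRED_KEYS = ("name", "timeout")
DEFAULTS = {"name": "unnamed", "timeout": 30}


def run_fix_config_workflow(files: dict[str, str], path: str) -> list[str]:
    """Run read -> validate -> fix -> save and return the steps that ran."""
    steps: list[str] = []

    config = json.loads(files[path])
    steps.append("read")

    missing = [key for key in REQUIRED_KEYS if key not in config]
    steps.append("validate")

    if missing:
        # Fill each missing key with its default value.
        for key in missing:
            config[key] = DEFAULTS[key]
        steps.append("fix")

        files[path] = json.dumps(config, sort_keys=True)
        steps.append("save")

    return steps


files = {"app.json": '{"name": "demo"}'}
print(run_fix_config_workflow(files, "app.json"))
print(files["app.json"])
```

Running the workflow a second time on the same file stops after validation, because the earlier fix has already been saved.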

Here is a minimal example to show what an MCP call might look like:

    {
      "callTool": {
        "name": "createReport",
        "arguments": {
          "format": "pdf",
          "includeLogs": true
        }
      }
    }

The server executes createReport, handles the logic, and returns structured output that the model can use.

How to Plug Requestly With MCP

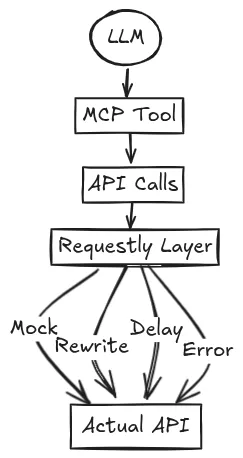

The moment MCP starts interacting with real systems, APIs become the backbone of those workflows. MCP tools often call APIs behind the scenes to fetch data, validate inputs, synchronize state, generate outputs, or trigger backend logic. And because LLMs rely on strict patterns, any variation in API behavior can directly affect how an MCP workflow performs.

This is where Requestly fits naturally into the picture.

When an MCP tool wraps an API call, developers need a way to control how that API behaves during testing. They need to simulate responses, inject delays, test error paths, or reproduce odd states that the MCP workflow might encounter. Requestly already solves these problems for frontend and backend teams, and the same capabilities extend cleanly into MCP-driven environments.

For example, if your MCP tool calls an internal endpoint like:

POST /validate-config

You can use Requestly to:

- Mock a successful response so the model can continue the workflow.

- Inject a failure to see how the MCP tool handles it.

- Delay the response to test how the model reacts under slow network conditions.

- Rewrite the returned data shape to check if the tool schema catches inconsistencies.

This is especially useful when schemas are strict, because MCP tools expect predictable output. If the real API suddenly changes a field name or structure, the model might silently misinterpret the result. By mocking those variations in Requestly first, teams can catch issues early.
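The four variations listed above can be written down as test scenarios for the tool's response handling. The Python below is only an in-process sketch of those scenarios. It does not use or represent Requestly's API, and the `valid` field, the timeout value, and the outcome labels are assumptions for illustration.

```python
# Each scenario: (HTTP status, response body, simulated delay in seconds).
SCENARIOS: dict[str, tuple[int, dict, float]] = {
    "success": (200, {"valid": True}, 0.0),
    "failure": (500, {"error": "internal"}, 0.0),
    "slow": (200, {"valid": True}, 5.0),
    "renamed_field": (200, {"isValid": True}, 0.0),
}


def check_validate_response(status: int, body: dict) -> str:
    """Classify a /validate-config response the way a strict tool would."""
    if status != 200:
        return "api_error"
    # The tool expects a boolean "valid" field; anything else is a mismatch.
    if not isinstance(body.get("valid"), bool):
        return "schema_mismatch"
    return "ok"


def run_scenario(name: str, timeout_s: float = 2.0) -> str:
    status, body, delay_s = SCENARIOS[name]
    # The delay is compared rather than slept so the example runs instantly.
    if delay_s > timeout_s:
        return "timeout"
    return check_validate_response(status, body)


for scenario in SCENARIOS:
    print(scenario, "->", run_scenario(scenario))
```

The `renamed_field` case is the one that tends to slip through silently: the call succeeds with HTTP 200, and only an explicit schema check reports the mismatch.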

The same applies to multi-step MCP workflows.

If a model reads a resource, calls a tool, and then triggers a backend API, Requestly can simulate each part of that chain. Developers can reproduce edge cases without touching production systems or rewriting parts of their MCP server.

In a practical sense, Requestly becomes the testing layer for MCP-powered integrations, helping teams understand how their tools behave when real APIs return different states.

Conclusion

MCP doesn’t replace APIs; it exposes how tightly models depend on their consistency. As tools get more structured and workflows get more model-driven, the cost of unpredictable API behaviour goes up fast. That’s why controlled environments, mocks, and rewrites stop being “nice to have” and become part of the development loop, and it’s where Requestly fits naturally.

The more MCP expands, the more important the ecosystem around it becomes.
