Running Hugging Face Models in Requestly


If you’ve ever tried experimenting with Hugging Face models through their Inference API, you know it’s not always smooth sailing.

Different models have different input/output schemas. Free-tier models have latency issues that make you question whether it’s a problem with your setup or your model provider. API keys need to be passed on every request. And when you hit rate limits, you’re left wishing you had set up a better workflow for trying out these APIs.

Most devs reach for `curl`, Postman, or Insomnia to test things. That works fine for basic requests, but I’ve found those tools get in the way once you get to the point of trying multiple models with different versions of your prompts.

That’s where Requestly comes in.

Why not just Postman?

Good question. Postman is the giant in this space, so why bother switching?

Here’s what I found when working with Hugging Face APIs specifically:

Postman vs Requestly for Hugging Face APIs

Most developers reach for Postman or Insomnia first — and they’re great tools.

Where Requestly shines (especially for Hugging Face workflows) is in being local-first and Git-friendly.

| Feature | Postman / Insomnia | Requestly |
|---|---|---|
| Local-first | Accounts/cloud sync encouraged, offline available but secondary | Fully offline by default, no login required |
| Performance | Feature-rich but can feel heavy | Lightweight, fast boot |
| Git integration | Export/import collections manually | Requests are local JSON → can commit directly |

Step 1: Grab your Hugging Face token

- Create or log in to your Hugging Face account.

- Go to Settings → Access Tokens.

- Create a new token with `read` permission.

- Copy it somewhere safe. We’ll need it to make the API requests.

Step 2: Make your first request in Requestly

Let’s try a text generation model (GPT-2).

- Open the Requestly desktop or web app.

- Create a new request and set the method to `POST`.

- Endpoint: `https://api-inference.huggingface.co/models/gpt2`

- Headers:

Authorization: Bearer <YOUR_HF_TOKEN>

Content-Type: application/json

- Body:

{

"inputs": "In 2030, DevOps engineers will"

}

Hit Send and you’ll get a JSON response like:

[

{

"generated_text": "In 2030, DevOps engineers will spend less time firefighting and more time building autonomous systems."

}

]

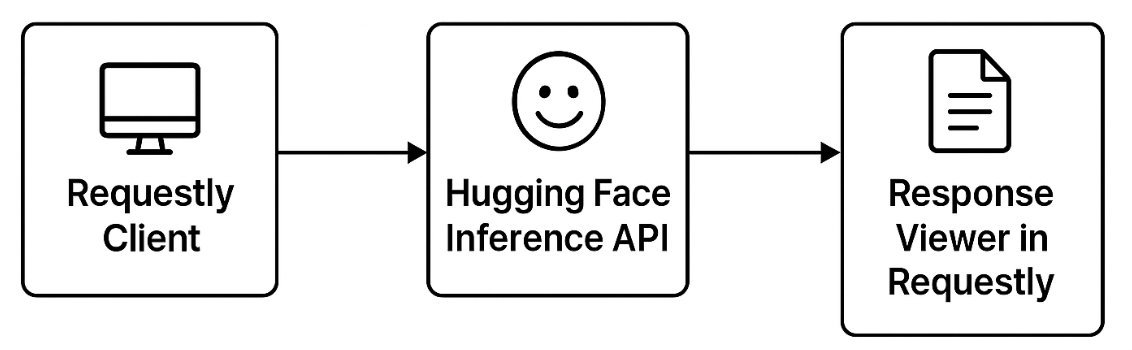
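For comparison, here is a minimal sketch of the same request in Python, using only the standard library. It mirrors the endpoint, headers, and body from the steps above. The function names `build_request` and `generate` are mine, not part of any Hugging Face or Requestly API, and the 60-second timeout is an assumption chosen to leave room for slow free-tier responses.

```python
import json
import urllib.request

API_URL = "https://api-inference.huggingface.co/models/gpt2"


def build_request(token: str, prompt: str) -> urllib.request.Request:
    """Build the same POST request that was configured in Requestly."""
    body = json.dumps({"inputs": prompt}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def generate(token: str, prompt: str, timeout: float = 60.0):
    """Send the request and return the decoded JSON response."""
    req = build_request(token, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `generate("<YOUR_HF_TOKEN>", "In 2030, DevOps engineers will")` should return the same kind of JSON list you see in Requestly, assuming the endpoint is reachable and the token is valid.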

Here’s a quick look at how the flow works:

Requestly to Hugging Face API Flow

Step 3: Explore other models quickly

Instead of reconfiguring everything, just duplicate your saved request and swap the endpoint.

Sentiment analysis

https://api-inference.huggingface.co/models/distilbert-base-uncased-finetuned-sst-2-english

{

"inputs": "I love debugging APIs with Requestly!"

}

Response:

[

{ "label": "POSITIVE", "score": 0.999 }

]
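Classification responses do not always come back in exactly this shape. In my experience the list of label/score objects is sometimes wrapped in an extra outer list. Here is a small sketch of a helper that tolerates both forms. The names `flatten_predictions` and `top_label` are hypothetical, and the nested shape is an assumption you should check against the actual response in Requestly.

```python
from typing import Any, Dict, List


def flatten_predictions(response: Any) -> List[Dict[str, Any]]:
    """Return a flat list of {"label", "score"} dicts.

    Accepts [{"label": ..., "score": ...}] as well as the nested
    form [[{"label": ..., "score": ...}, ...]].
    """
    if not isinstance(response, list):
        # Error payloads are typically objects, not lists.
        raise ValueError(f"unexpected response shape: {type(response).__name__}")
    flat: List[Dict[str, Any]] = []
    for item in response:
        if isinstance(item, list):
            flat.extend(item)
        else:
            flat.append(item)
    return flat


def top_label(response: Any) -> str:
    """Return the label with the highest score."""
    predictions = flatten_predictions(response)
    if not predictions:
        raise ValueError("no predictions in response")
    return max(predictions, key=lambda p: p["score"])["label"]
```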

Summarization

https://api-inference.huggingface.co/models/facebook/bart-large-cnn

{

"inputs": "Kubernetes is a system for automating deployment, scaling, and management of containerized applications..."

}

Image captioning

https://api-inference.huggingface.co/models/nlpconnect/vit-gpt2-image-captioning

{

"inputs": "https://huggingface.co/datasets/mishig/sample_images/resolve/main/airport.jpg"

}
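If you later move these experiments into code, the duplicate-and-swap idea maps to a small lookup table. The sketch below uses the model IDs from this section. The task keys and the helper names `endpoint_for` and `payload_for` are my own labels, not official Hugging Face identifiers.

```python
BASE_URL = "https://api-inference.huggingface.co/models/"

# Task names are informal labels; the model IDs come from the examples above.
MODELS = {
    "text-generation": "gpt2",
    "sentiment": "distilbert-base-uncased-finetuned-sst-2-english",
    "summarization": "facebook/bart-large-cnn",
    "image-captioning": "nlpconnect/vit-gpt2-image-captioning",
}


def endpoint_for(task: str) -> str:
    """Return the inference endpoint for one of the tasks above."""
    return BASE_URL + MODELS[task]


def payload_for(inputs: str) -> dict:
    """All four examples share the same {"inputs": ...} body shape."""
    return {"inputs": inputs}
```

Only the URL changes between requests, which is why duplicating a saved request is enough.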

Here's what Git integration actually looks like:

huggingface-tests/

├── ai-poc/

│ ├── sentiment.json

│ ├── summarization.json

│ └── image-captioning.json

└── README.md

Git workflow

git add ai-poc/sentiment.json

git commit -m "Add sentiment analysis request"

git push origin main

Now your Hugging Face API tests live in version control, right next to your app code. No bulky exports or workspace juggling.
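Since the request files end up in Git, you may want a quick check that no real token slipped into them before you commit. This is a rough sketch, not a Requestly feature. It assumes tokens start with `hf_` followed by a long alphanumeric run, which is a heuristic, and `find_hardcoded_tokens` is a hypothetical helper name.

```python
import re
from pathlib import Path

# Heuristic assumption: a token looks like "hf_" plus 16 or more letters/digits.
TOKEN_PATTERN = re.compile(r"hf_[A-Za-z0-9]{16,}")


def find_hardcoded_tokens(root: str) -> list:
    """Return (file path, line number) pairs for lines that look like they contain a token."""
    hits = []
    for path in sorted(Path(root).rglob("*.json")):
        lines = path.read_text(encoding="utf-8").splitlines()
        for lineno, line in enumerate(lines, start=1):
            if TOKEN_PATTERN.search(line):
                hits.append((str(path), lineno))
    return hits
```

Running `find_hardcoded_tokens("huggingface-tests")` before `git add` gives you a list to review. An empty list means nothing matched the pattern, not that the files are guaranteed clean.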

Step 4: Deal with real-world API quirks

Playing with Hugging Face models isn’t always plug-and-play. Some challenges I’ve run into:

- Cold starts: Free-tier models might take 30+ seconds to spin up.

- Rate limits: Frequent requests can trigger 429 Too Many Requests. It helps to be able to switch to another model when you are rate limited.

- Different schemas: Some models return arrays, others return nested objects. It gets even worse when working with files and multimodal models.

- Retries & errors: You’ll occasionally see 503 for overloaded models.

- Streaming outputs: Almost all LLMs need streaming support.

When a request fails, you can immediately hit Send again without reconfiguring anything – no need to scroll up in terminal history or re-export from another tool.
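Once the request moves into a script, the manual "hit Send again" step can be approximated with a retry loop. The sketch below retries on the 429 and 503 statuses mentioned above. The exponential backoff, the attempt limit, and the `send` callable (which should return a `(status, body)` pair) are all assumptions for illustration.

```python
import time
from typing import Callable, Tuple

RETRYABLE = {429, 503}  # rate limited, model overloaded or still loading


def send_with_retry(
    send: Callable[[], Tuple[int, object]],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, object]:
    """Call send() until it returns a non-retryable status or attempts run out."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, body = send()
        if status not in RETRYABLE:
            return status, body
        if attempt < max_attempts - 1:
            # Wait 1x, 2x, 4x, ... the base delay between attempts.
            sleep(base_delay * (2 ** attempt))
    return status, body
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting. For cold starts of 30+ seconds you would likely want a larger `base_delay`.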

Step 5: Save and Reuse API

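Once your requests work, you can:

- Save them into a Collection

- Version control them in Git (great for teams)

- Share with colleagues just like you share code

Keep tokens out of the saved requests by defining them as environment variables: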

{

"variables": {

"HF_TOKEN_DEV": "hf_dev_123...",

"HF_TOKEN_TEAM": "hf_team_456..."

}

}

Then in your headers:

Authorization: Bearer {{HF_TOKEN_TEAM}}

Switching from personal to team environments is literally one click. You can also selectively bring your requests along with you when you switch.
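To make the placeholder idea concrete, here is a tiny sketch of how `{{NAME}}` substitution can work in general. It illustrates the concept only and is not Requestly's actual implementation. The `render` function and its error behavior are my own choices.

```python
import re

# Matches {{NAME}} with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render(template: str, variables: dict) -> str:
    """Replace each {{NAME}} in template with variables[NAME]."""

    def replace(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Fail loudly instead of sending a literal "{{NAME}}" to the API.
            raise KeyError(f"undefined variable: {name}")
        return str(variables[name])

    return PLACEHOLDER.sub(replace, template)
```

Switching environments then amounts to passing a different `variables` dictionary while the header template stays the same.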

Now your Hugging Face experiments are reproducible and collaborative — not just throwaway curl commands.

A simple developer workflow

Here’s how I’ve been using Requestly with Hugging Face in practice:

- Prototype model requests in Requestly

- Save and organize them into collections

- Version them in Git for team use

- Once stable, export payloads into Python/JS for integration

This keeps the “exploration” phase fast and lightweight, and the “production” phase clean.

Wrapping up

Testing Hugging Face APIs doesn’t have to be a mess of curl commands, expired tokens, and inconsistent schemas.

If you already use Postman or Insomnia and they work for you, great. But if you want something lighter, local-first, and Git-friendly, Requestly is worth a shot.

Next time you’re experimenting with summarization, sentiment, or image captioning models, fire up Requestly, duplicate a request, and see results in seconds.

